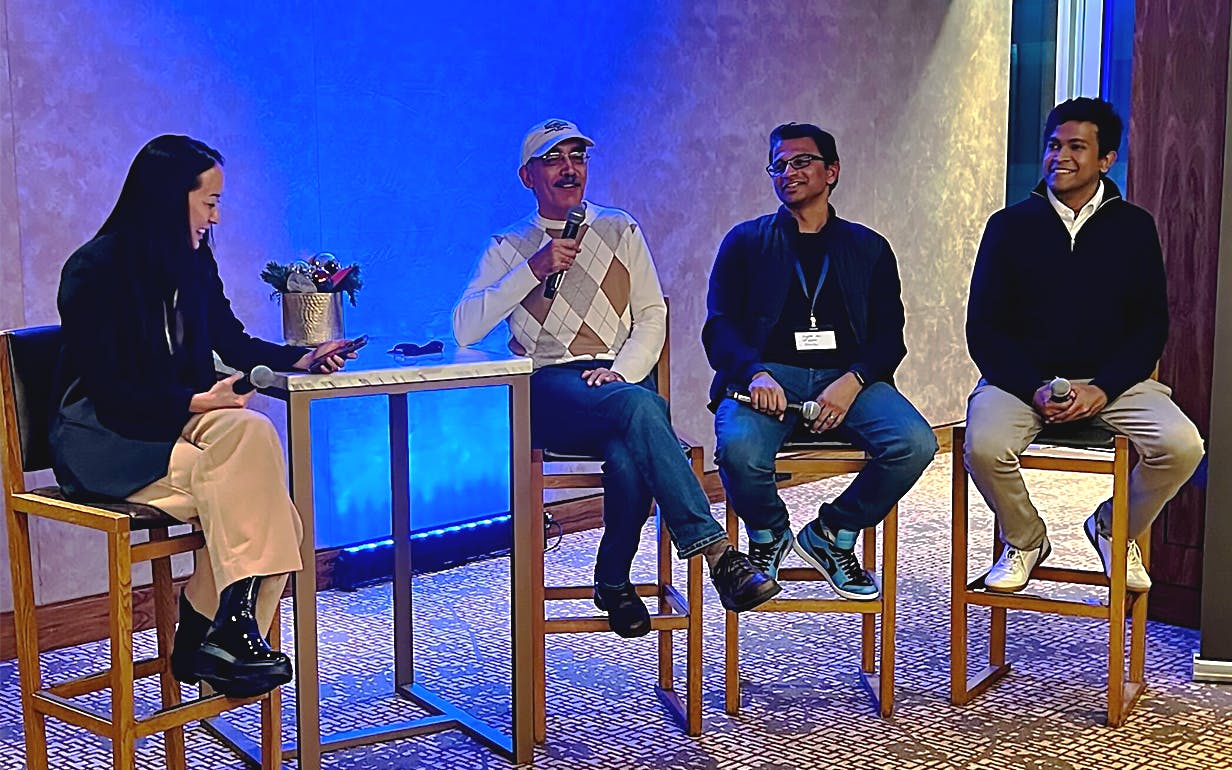

On December 11, 2024, Turing hosted a fireside chat at NeurIPS in Vancouver, bringing together industry leaders from Turing, Oracle, and IBM Research to discuss the cutting edge of AI and its path toward AGI.

Moderated by Anna Tong from Reuters, the panel included:

- Sujith Ravi, VP of GenAI at Oracle

- Ruchir Puri, Chief Scientist at IBM Research

- Jonathan Siddharth, Co-Founder & CEO at Turing

The panelists engaged in a dynamic conversation about the state of AI in 2024, addressing key achievements, opportunities for advancement, and the critical challenges shaping the trajectory toward AGI.

Reflecting on the state of AI in 2024

The discussion opened with reflections on the current AI landscape, which has seen extraordinary growth and adoption in the past year.

Puri highlighted how 2024 marked a significant democratization of LLMs.

He observed that the value of AI is shifting from the models themselves to their practical applications. “The value is shifting away from only a model to what you can do with those models. That’s what excites me most: what [LLMs] can do from a usefulness point of view as we move into next year.”

Ravi emphasized the transformation in LLM capabilities as new teams gain access to this technology, noting a critical driver of success: “Adoption, adoption, adoption!”

He continued, “The number of people who are able to access these technologies and do interesting things with them has increased x-fold. That just means more smart people looking at interesting problems than ever before.”

Critical AI advancements in 2024

The panel identified several pivotal themes driving progress in AI over the past year, framing them as essential building blocks for the technology’s future:

Coding as a cornerstone for AI advancement

Siddharth emphasized that coding remains at the heart of AI’s reasoning abilities. He described it as one part of a trifecta, alongside data analysis and tool use, and likened that trifecta to “Prometheus teaching fire to humankind.”

Proficiency in these areas, Siddharth argued, is vital for automating processes like AI research and software engineering. “Coding isn’t just a technical skill; it’s the foundation for reasoning tasks that push AI closer to general intelligence.”

Multimodal reasoning (beyond text)

The second major theme was the shift from text-based models to multimodal systems capable of integrating text, audio, video, images, and computer interactions.

Siddharth highlighted the growing importance of this ability, explaining, “Multimodal reasoning has become really, really important. Can you teach a model to use a computer, accomplish basic tasks, and workflows? That’s a big theme.”

This shift opens doors to more versatile and practical AI applications, from visual diagnostics in healthcare to fully integrated enterprise solutions.

Advanced generalization for global applications

Puri spoke to the strides made in generalization, a key capability that lets AI systems move beyond narrow, task-specific applications. “I haven’t seen as general a technology in my lifetime,” he remarked. “The generalization part is what excites me most.”

This ability to generalize allows AI to tackle a diverse array of challenges across industries and functions, expanding what these models can do.

Usefulness and real-world impact

The panelists underscored the importance of prioritizing useful AI over abstract notions of intelligence. Ravi reflected on how machine learning has always aimed to approximate human intelligence, explaining, “The utility of this is real. We are at the early stages of blurring the boundary between the digital and physical worlds.”

“I’m going to let you in on a little secret,” Siddharth mused to the crowd. “We talk about AGI in public; in private, we talk evals. It’s all about evaluations on query distributions that product owners care about. That’s the only systematic way we know to improve these systems.”

As an example, he pointed to legacy systems written in COBOL: “19 out of 20 transactions when you swipe your credit card hit a piece of COBOL code. Refactoring and modernizing such systems create immense value.”

Puri echoed this sentiment, pointing out that addressing such “trillion-dollar problems” could be transformative for industries worldwide.

This commitment to practical evaluation reflects a broader shift toward outcomes-driven development. By focusing on what users need in real-world scenarios, the industry aims to ensure that AI systems deliver tangible value and reliability.

Considerations for AGI advancement

The discussion also touched on broader philosophical and practical questions surrounding AGI.

- Defining superintelligence – Puri pointed out the challenges in defining superintelligence, since we keep pushing the boundaries of what we previously thought was possible. “What is superintelligence? We thought chess was the pinnacle of intelligence. Now machines have surpassed humans in chess, but does that make them superintelligent?” His point underscored the fluidity and cultural context of how intelligence is defined and valued. He also shared advice from his mentor on avoiding the term “optimal,” noting, “Real life is so complex that ‘optimal’ often lacks practical meaning.”

- Expanding intelligence to cover EQ and RQ – Puri advocated for expanding the definition of intelligence beyond IQ to include emotional and relational quotients, which remain largely unexplored in AI systems. “To get anything done in real life, those three quotients have to come together. IQ, EQ, and RQ [relationship quotient] must align.” In his view, all three are vital for achieving practical, human-centered AGI.

- Resource efficiency – All panelists highlighted the need for energy-efficient AI. Ravi remarked, “Every brain in this room runs on 20 watts and sandwiches. The day we achieve that efficiency with AI, I’ll agree we’ve got [true] AGI.” His comparison pointed to the heavy energy demands of modern AI systems and the importance of sustainable progress.

Looking ahead to AI in 2025

As the panel concluded, the speakers shared their visions for the year ahead.

Siddharth highlighted the potential of AI to automate and enhance knowledge work across industries, stating, “For every industry, every function, every role, every task, that’s $30 trillion of knowledge work every year. The ceiling for AGI is not just the intelligence of a single human but a portfolio of humans collaborating to do complex tasks.”

Ravi urged researchers to focus on hard problems that extend beyond immediate commercial applications: “It’s an opportunity for the community to look at problems that aren’t apparent today but could become seeds for the next wave of innovation.”

Puri, for his part, expressed excitement about the shift from feedforward systems to interactive, feedback-driven AI that adapts in real time. “The shift upon us is from building intelligence at training time to building intelligence at inference time, where systems tune to what they’re seeing in the moment,” he explained.

Conclusion

By bringing together leading minds from research and development, the event offered a unique opportunity to reflect on how far AI has come and what lies ahead. The conversations underscored the progress made in 2024 and highlighted the human aspect of AI advancement.

As AI continues to evolve, events like these drive home the importance of fostering collaboration across disciplines to address the complex challenges of building AGI. The discussions from this evening are likely to inspire further innovation and dialogue in the months to come.

MG Stephenson
