Math Foundations for Data Science

Mathematical foundations are essential to understanding data science, a field that uses mathematical and statistical techniques to extract knowledge and insights from data. Making sense of data requires a combination of tools, methods, and techniques. These have evolved over time, but the fundamental mathematical principles behind them have stayed the same. They serve as the building blocks for the robust tools used to develop data-driven solutions.

Data science combines statistics, mathematics, artificial intelligence (AI), advanced analytics, and programming to uncover hidden, actionable insights from data. Businesses can use the results of this analysis to make informed decisions. Through it, data scientists can answer questions such as what happened, how and why it happened, what will happen next, and how the results can be put to use.

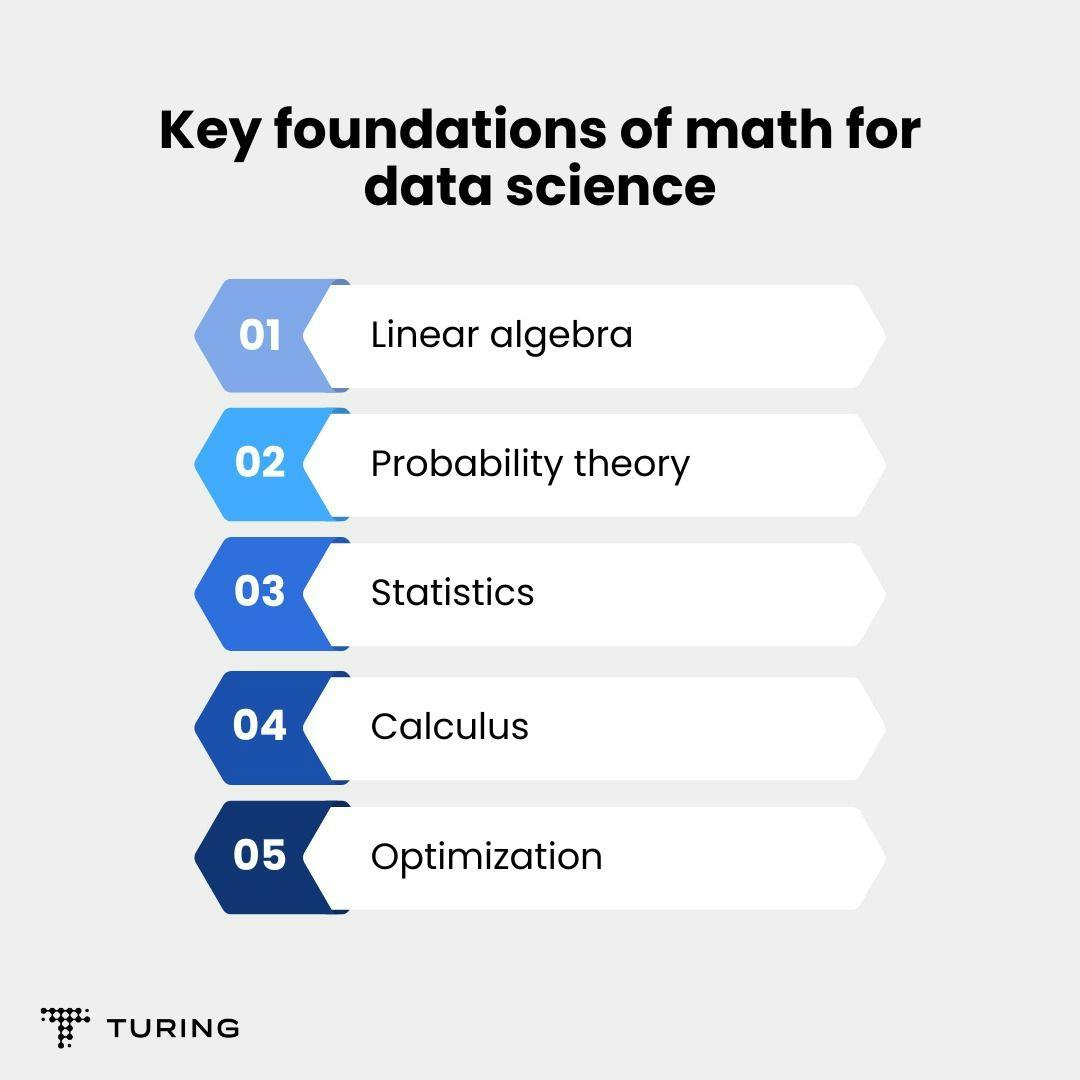

Important mathematical foundations for data science

The mathematical foundations for data science include a variety of topics such as linear algebra, probability theory, statistics, calculus, and optimization. In this article, we will explore these topics in detail and explain why they are important for data science.

Linear algebra

Linear algebra is a powerful branch of math for data science. It provides a way to represent and manipulate high-dimensional data in a compact and efficient form. In addition to its practical applications, linear algebra has a beautiful underlying structure that makes it a fascinating subject.

One of the unique aspects of linear algebra is the way it allows us to think about vectors and matrices geometrically. For example, we can think of a matrix as a linear transformation that maps one vector space to another. The properties of this transformation, such as its eigenvalues and eigenvectors, can reveal important information about the structure of the data we are working with.
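As a minimal sketch of this geometric view, the snippet below applies a small symmetric matrix to a few vectors and shows that an eigenvector is only scaled by the transformation, while a generic vector also changes direction. The matrix values and the helper name `mat_vec` are illustrative assumptions, not taken from any particular dataset or library.

```python
# Illustrative sketch: the matrix A and the helper mat_vec are assumptions.

def mat_vec(matrix, vector):
    """Apply a matrix (list of rows) to a vector, i.e. the linear transformation."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

# A symmetric matrix that stretches the plane along two perpendicular directions.
A = [[2.0, 1.0],
     [1.0, 2.0]]

# For this A, (1, 1) is an eigenvector with eigenvalue 3,
# and (1, -1) is an eigenvector with eigenvalue 1.
v1 = [1.0, 1.0]
v2 = [1.0, -1.0]

print(mat_vec(A, v1))          # same direction as v1, three times as long
print(mat_vec(A, v2))          # unchanged, because the eigenvalue is 1
print(mat_vec(A, [1.0, 0.0]))  # a generic vector changes direction too
```

The eigenvalues describe how strongly the transformation stretches each eigenvector direction, which is the property that techniques such as principal component analysis rely on.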

Another unique aspect of linear algebra is the way it connects different areas of mathematics. For example, linear algebra is intimately connected with calculus and differential equations. This connection allows us to use linear algebra to solve complex problems in other areas of mathematics and science.

In data science, linear algebra is used in a wide range of applications, such as machine learning, data compression, and image processing. For example, in machine learning, linear algebra is used to represent the parameters of a model and to perform operations such as matrix multiplication and matrix inversion. These operations are essential for training and evaluating models that can learn patterns in data and make predictions about new data.
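The two operations named above can be sketched in plain Python for a tiny model. The feature matrix `X`, the parameter vector `w`, and the helpers `mat_mul` and `inverse_2x2` are hypothetical names and values chosen for illustration; in practice a numerical library would normally be used, and explicit inversion is usually avoided for large matrices.

```python
# Illustrative sketch: X, w, mat_mul and inverse_2x2 are assumptions.

def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def inverse_2x2(m):
    """Invert a 2x2 matrix using the closed-form adjugate formula."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular and has no inverse")
    return [[d / det, -b / det],
            [-c / det, a / det]]

# Two observations (rows) with two features each.
X = [[1.0, 2.0],
     [3.0, 4.0]]
# Model parameters stored as a column vector.
w = [[0.5],
     [-1.0]]

# Predictions are a single matrix multiplication: y = X w.
y = mat_mul(X, w)

# X is square and invertible here, so the parameters can be
# recovered from the predictions as w = X^{-1} y.
w_recovered = mat_mul(inverse_2x2(X), y)
print(y, w_recovered)
```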

In conclusion, linear algebra is a fundamental branch of mathematics that is uniquely suited to meet the needs of data science. Its geometric interpretation and connections to other areas of mathematics make it a fascinating subject, while its practical applications make it an essential tool for working with high-dimensional data. No matter how evolved and advanced data science becomes, linear algebra will remain a core component of the field, playing a crucial role in many innovative and impactful applications.

Probability theory

Probability theory is a branch of math for data science that provides a set of tools for dealing with uncertainty and randomness. It is an essential foundation for data science, providing a way to quantify the likelihood of different outcomes and to make predictions about future events based on past observations. In particular, probability theory is used in data science to help us model and understand the behavior of complex systems and to make informed decisions based on data.

One of the key applications of probability theory in data science is Bayesian inference. This involves using Bayes' theorem to update our beliefs about the probability of different events based on new information. For example, in a medical study, we might use Bayesian inference to update our beliefs about the likelihood of a disease based on new diagnostic tests.
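The diagnostic-test example can be worked through with Bayes' theorem directly. The prevalence, sensitivity, and specificity below are made-up numbers for illustration, and `posterior_probability` is a hypothetical helper name.

```python
# Illustrative sketch: all numbers and the function name are assumptions.

def posterior_probability(prior, sensitivity, specificity):
    """P(disease | positive test) computed with Bayes' theorem."""
    true_positive = sensitivity * prior
    false_positive = (1.0 - specificity) * (1.0 - prior)
    return true_positive / (true_positive + false_positive)

# Assumed values: 1% prevalence, 95% sensitivity, 90% specificity.
belief = posterior_probability(prior=0.01, sensitivity=0.95, specificity=0.90)
print(f"after one positive test: {belief:.3f}")

# A second positive result updates the belief again, using the first
# posterior as the new prior. This assumes the two test results are
# independent given the patient's true disease status.
second = posterior_probability(prior=belief, sensitivity=0.95, specificity=0.90)
print(f"after two positive tests: {second:.3f}")
```

With these assumed numbers, a single positive result still leaves the probability of disease below 9%, because false positives among the many healthy patients outnumber true positives. This is why the prior matters so much in Bayesian updating.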

Probability theory is also used in machine learning, which is a branch of data science that focuses on building models that can learn patterns in data and make predictions about new data. In particular, probability theory is used to estimate the parameters of a model and to evaluate the model's performance. For example, in a classification model, we might use probability theory to estimate the probability of each class given a set of input features.

In addition to Bayesian inference and machine learning, probability theory is used in many other areas of data science, such as experimental design, statistical inference, and decision analysis. For example, probability theory is used in experimental design to determine the optimal sample size needed to detect a significant difference between two groups.
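One common way to make the sample-size example concrete is the normal-approximation formula for comparing two group means, n = 2 (z_{1-α/2} + z_{1-β})² σ² / δ² per group. The sketch below assumes equal group sizes, a known common standard deviation, and a two-sided test; the function name and the example numbers are hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_per_group(effect, sigma, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-sample
    comparison of means (normal approximation, equal variances)."""
    z_alpha = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # significance quantile
    z_beta = NormalDist().inv_cdf(power)               # power quantile
    n = 2.0 * ((z_alpha + z_beta) * sigma / effect) ** 2
    return math.ceil(n)

# Assumed scenario: detect a difference of 5 units when the
# standard deviation within each group is 10 units.
n = sample_size_per_group(effect=5.0, sigma=10.0)
print(n)
```

Halving the effect size to be detected roughly quadruples the required sample, since the effect enters the formula squared.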

In conclusion, probability theory is a fundamental branch of mathematics that is essential for many applications in data science. Whether it's Bayesian inference, machine learning, or experimental design, probability theory provides the tools necessary to work with uncertain and complex datasets. As the field of data science continues to grow and evolve, probability theory will likely remain a core component of the field, powering many of its most innovative and impactful applications.

Statistics

Statistics is a branch of math for data science that provides a set of tools for analyzing and interpreting data. From descriptive statistics to inferential statistics, this field provides a variety of techniques for extracting insights from data and making predictions about the world. In particular, statistics is used in data science to make sense of the patterns and relationships that exist within complex datasets.

One of the key applications of statistics in data science is in hypothesis testing. This involves formulating a hypothesis about a relationship between variables and using statistical techniques to test whether the evidence supports or refutes the hypothesis. For example, in a medical study, we might want to test whether a new drug is more effective than a placebo. We could use statistical techniques such as a t-test or ANOVA to analyze the data and draw conclusions about the effectiveness of the drug.
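A rough standard-library sketch of the drug-versus-placebo comparison follows. It computes Welch's t statistic and, instead of looking up the t distribution, estimates the p-value with a permutation test, which is a deliberate substitution to keep the example dependency-free. The outcome scores are invented for illustration, and the function names are hypothetical.

```python
import random
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic for the difference in means of two samples."""
    standard_error = (variance(a) / len(a) + variance(b) / len(b)) ** 0.5
    return (mean(a) - mean(b)) / standard_error

def permutation_p_value(a, b, n_permutations=2000, seed=0):
    """Two-sided p-value: how often randomly relabelled groups give a
    |t| at least as large as the observed one."""
    rng = random.Random(seed)
    observed = abs(welch_t(a, b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if abs(welch_t(pooled[:len(a)], pooled[len(a):])) >= observed:
            hits += 1
    # Add-one correction so the estimate is never exactly zero.
    return (hits + 1) / (n_permutations + 1)

# Invented outcome scores (higher is better).
drug = [7.1, 6.8, 7.4, 8.0, 7.7, 6.9, 7.5, 8.2]
placebo = [6.2, 6.0, 6.9, 6.4, 5.8, 6.6, 6.1, 6.5]

t_stat = welch_t(drug, placebo)
p_value = permutation_p_value(drug, placebo)
print(f"t = {t_stat:.2f}, p = {p_value:.4f}")
```

A small p-value means that a difference this large would rarely arise if the drug and placebo labels were interchangeable, which counts as evidence against the null hypothesis of no effect.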

Statistics is also used in machine learning, which is a branch of data science that focuses on building models that can learn patterns in data and make predictions about new data. In particular, statistics is used to estimate the parameters of a model and to evaluate the model's performance. For example, in a linear regression model, we might use statistics to estimate the coefficients of the model and to test whether the model is a good fit for the data.
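For a single input variable, the coefficient estimates and a basic goodness-of-fit measure can be written out by hand. This is a minimal sketch of ordinary least squares and R² on made-up data; `fit_line` and `r_squared` are hypothetical helper names.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares estimates for y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

def r_squared(x, y, intercept, slope):
    """Share of the variance in y that the fitted line explains."""
    y_bar = mean(y)
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot

# Made-up observations that lie close to the line y = 2x.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = fit_line(x, y)
fit_quality = r_squared(x, y, intercept, slope)
print(intercept, slope, fit_quality)
```

An R² close to 1 indicates that the line accounts for most of the variation in the observations, although it does not by itself show that a linear model is the right choice.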

In addition to hypothesis testing and machine learning, statistics is used in many other areas of data science, such as data visualization, experimental design, and sampling techniques. For example, statistics is used in data visualization to summarize and display data in a meaningful way, making it easier to identify patterns and relationships.

In conclusion, statistics is a fundamental branch of mathematics that is essential for many applications in data science. Whether it's hypothesis testing, machine learning, or data visualization, statistics provides the tools necessary to work with complex and high-dimensional datasets.

Calculus

Calculus is a branch of math for data science that deals with the study of rates of change and how things vary over time. It is a foundation for many applications in data science, providing a set of tools that are essential for working with large datasets and complex models. In particular, calculus is used in data science to perform optimization, which involves finding the best solution to a problem subject to certain constraints.

One of the most important applications of calculus in data science is gradient descent, a key optimization algorithm used in machine learning. Gradient descent iteratively updates the parameters of a model to minimize an objective function (maximizing is the same procedure applied to the negated objective, often called gradient ascent). Calculus is used to compute the gradient of the objective function, which points in the direction of steepest ascent; its negative points in the direction of steepest descent. By repeatedly stepping in the direction of the negative gradient with a suitable step size, the algorithm moves toward a minimum, which is the global optimum when the objective is convex and may otherwise be only a local one.
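A minimal sketch of gradient descent on a one-parameter objective is shown here. The objective f(x) = (x - 3)², the learning rate, and the number of steps are assumptions chosen so that the behavior is easy to check by hand.

```python
# Illustrative sketch: the objective, learning rate and step count are assumptions.

def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(steps):
        x = x - learning_rate * grad(x)
    return x

def objective_gradient(x):
    """Derivative of f(x) = (x - 3)^2, whose minimum is at x = 3."""
    return 2.0 * (x - 3.0)

minimum = gradient_descent(objective_gradient, start=0.0)
print(minimum)  # approaches 3
```

Each step shrinks the distance to the minimum by a constant factor here (0.8 with this learning rate). A learning rate that is too large would make the iterates overshoot and diverge instead.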

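Calculus is also used in other optimization techniques such as Newton's method and quasi-Newton methods. Newton's method is a root-finding procedure that iteratively improves an initial guess; in optimization it is applied to the gradient, using second derivatives (the Hessian) to locate points where the gradient is zero. Quasi-Newton methods follow the same idea but approximate the second-derivative information instead of computing it exactly. These methods are used in a wide range of applications in data science, including optimization-based machine learning, data fitting, and statistical modeling.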
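In one dimension, Newton's method for minimization reduces to the update x ← x − f′(x) / f″(x). The sketch below applies it to the convex function f(x) = x² + e^(−x), which is an example objective chosen for illustration; `newton_minimize` is a hypothetical helper name.

```python
import math

def newton_minimize(first_derivative, second_derivative, start,
                    tol=1e-10, max_iter=50):
    """Find a stationary point by applying Newton's root-finding
    update to the first derivative."""
    x = start
    for _ in range(max_iter):
        step = first_derivative(x) / second_derivative(x)
        x -= step
        if abs(step) < tol:
            break
    return x

# Example objective (an assumption): f(x) = x^2 + exp(-x), which is convex.
def f_prime(x):
    return 2.0 * x - math.exp(-x)

def f_double_prime(x):
    return 2.0 + math.exp(-x)

x_star = newton_minimize(f_prime, f_double_prime, start=0.0)
print(x_star, f_prime(x_star))  # the derivative is close to zero at x_star
```

Because the second derivative rescales each step, Newton's method typically needs far fewer iterations than gradient descent near the solution, at the cost of computing or approximating second derivatives.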

In addition to optimization, calculus is also used in many other areas of data science, such as time-series analysis, signal processing, and dynamical systems. For example, calculus is used in time-series analysis to compute derivatives and integrals of data, which can be used to identify trends and patterns in the data.
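For sampled data, derivatives and integrals are usually approximated numerically. The following sketch uses forward differences and the trapezoidal rule on a short made-up series, assuming evenly spaced observations; the function names are hypothetical.

```python
# Illustrative sketch: the readings and function names are assumptions.

def finite_difference(values, dt=1.0):
    """Approximate the derivative between consecutive samples."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]

def trapezoid_integral(values, dt=1.0):
    """Approximate the integral of the series with the trapezoidal rule."""
    return sum((a + b) / 2.0 * dt for a, b in zip(values, values[1:]))

# Made-up readings taken one time unit apart.
readings = [10.0, 12.0, 15.0, 19.0, 24.0]

rates = finite_difference(readings)   # growth per time step
total = trapezoid_integral(readings)  # accumulated area under the series
print(rates, total)
```

The steadily increasing differences show that this series is not just rising but accelerating, which is the kind of trend information the derivative exposes.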

In conclusion, calculus is a fundamental branch of mathematics that is essential for many applications in data science. Whether it is performing optimization, analyzing time-series data, or modeling dynamical systems, calculus provides the tools necessary to work with large and complex datasets.

Optimization

Optimization is a fundamental concept in math for data science that serves as a foundation for many applications. At its core, optimization is the process of finding the best solution to a problem, subject to certain constraints. In data science, optimization is used to solve a wide range of problems, including finding the best model parameters, identifying important features in a dataset, and clustering data points.

One of the primary tools used in optimization is calculus. Calculus provides a framework for computing the gradient of a function, which points in the direction of steepest ascent; its negative gives the direction of steepest descent. In optimization, this gradient is used to update the parameters of a model to minimize an objective function, a process known as gradient descent (or gradient ascent when the objective is maximized). It is a foundational concept in machine learning.

Another important mathematical concept used in optimization is linear algebra. Linear algebra provides the tools necessary for working with matrices and vectors, which are essential for representing and manipulating data. In optimization, linear algebra is used to solve systems of equations and to compute the eigenvectors and eigenvalues of a matrix, which are used in principal component analysis and other dimensionality reduction techniques.
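To illustrate the link between eigenvectors and principal component analysis, the sketch below finds the first principal component of a small two-dimensional dataset from the eigen-decomposition of its 2×2 covariance matrix, using the closed-form solution of the characteristic equation. The data points and helper names are assumptions made for illustration; real datasets with more dimensions would need a numerical eigen-solver.

```python
import math
from statistics import mean

def covariance_matrix_2d(points):
    """Sample covariance entries (sxx, sxy, syy) of 2D points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_bar, y_bar = mean(xs), mean(ys)
    n = len(points) - 1
    sxx = sum((x - x_bar) ** 2 for x in xs) / n
    syy = sum((y - y_bar) ** 2 for y in ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in points) / n
    return sxx, sxy, syy

def principal_component(points):
    """Largest eigenvalue and unit eigenvector of the covariance matrix."""
    sxx, sxy, syy = covariance_matrix_2d(points)
    # Eigenvalues of [[sxx, sxy], [sxy, syy]] solve
    # lambda^2 - trace * lambda + det = 0.
    trace = sxx + syy
    det = sxx * syy - sxy ** 2
    largest = trace / 2.0 + math.sqrt(max(trace ** 2 / 4.0 - det, 0.0))
    if sxy != 0:
        vx, vy = sxy, largest - sxx  # satisfies (C - largest * I) v = 0
    else:
        vx, vy = (1.0, 0.0) if sxx >= syy else (0.0, 1.0)
    norm = math.hypot(vx, vy)
    return largest, (vx / norm, vy / norm), largest / trace

# Made-up points scattered around the line y = x.
points = [(1.0, 1.1), (2.0, 1.9), (3.0, 3.2), (4.0, 3.8), (5.0, 5.0)]

eigenvalue, direction, explained = principal_component(points)
print(direction, explained)
```

The returned direction is the axis along which the data varies most, and `explained` is the fraction of total variance captured by projecting onto it.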

Optimization is also closely related to probability and statistics, which are used in many data science applications. For example, in machine learning, probability is used to model the likelihood of an event, and statistics is used to estimate the parameters of a model based on observed data. In optimization, these concepts are used to find the optimal solution to a problem while accounting for uncertainty and noise in the data.

As data science continues to evolve, optimization techniques are being developed and refined to handle increasingly complex and large-scale datasets. One such technique is stochastic gradient descent, which updates model parameters using the gradient computed on a randomly sampled example or small batch rather than the full dataset, making it well-suited for large datasets. Another technique is convex optimization, which minimizes a convex objective function over a convex set of feasible solutions, so that any local minimum is also a global one; it is widely used in machine learning and other data science applications.
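Stochastic gradient descent can be sketched for a one-variable linear model with squared error, updating the parameters after each randomly ordered example. The data, learning rate, epoch count, and the function name `sgd_linear_fit` are assumptions for illustration; the data is noise-free so that the result is easy to verify.

```python
import random

def sgd_linear_fit(xs, ys, learning_rate=0.02, epochs=500, seed=0):
    """Fit y = intercept + slope * x by stochastic gradient descent
    on the squared error of one example at a time."""
    rng = random.Random(seed)
    slope, intercept = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a random order
        for i in indices:
            error = (intercept + slope * xs[i]) - ys[i]
            # Gradient of error^2 with respect to each parameter.
            slope -= learning_rate * 2.0 * error * xs[i]
            intercept -= learning_rate * 2.0 * error
    return intercept, slope

# Noise-free data generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

intercept, slope = sgd_linear_fit(xs, ys)
print(intercept, slope)  # close to 1 and 2
```

Each update touches a single example, so the cost per step does not grow with the size of the dataset. With noisy data the estimates would fluctuate around the best fit unless the learning rate is decreased over time.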

In conclusion, optimization is a fundamental concept in mathematics that serves as a foundation for many applications in data science. From machine learning to dimensionality reduction, optimization plays a critical role in helping data scientists extract insights and make predictions from complex and high-dimensional datasets. As data science continues to grow and evolve, it is likely that optimization will remain a core component of the field, powering many of its most innovative and impactful applications.

Conclusion

Math foundations for data science are essential to understanding the principles of the field. Linear algebra is used to represent data and algorithms, probability theory to model uncertainty and make predictions based on data, statistics to infer knowledge from data, calculus to model the behavior of complex systems and to optimize functions, and optimization to find the best solution to a problem.

In order to become proficient in data science, it is important to have a solid understanding of these mathematical foundations. This can be achieved through self-study, online courses, and formal education. By mastering these foundational concepts, data scientists can develop models that accurately predict and explain real-world phenomena, leading to better decision-making and improved outcomes.

Author

Arinze Ugwu

Arinze is an experienced Data Scientist (ML), driven by a strong desire to solve business challenges with advanced technologies. He is also passionate about sharing knowledge through technical writing.