Using Deep Learning to Design Real-time Face Detection and Recognition Systems

Deep learning is a subset of machine learning that uses multi-layer neural networks to learn complex patterns directly from data. It has powered features such as automatic parking in cars, image analysis in healthcare, and virtual assistants, and it is now widely applied to visual recognition. This article looks at how deep learning is used in face detection and recognition systems.

Face detection vs. face recognition: what's the difference?

Face detection

In psychology, face detection refers to the process of locating and attending to faces in a visual scene. In deep learning, it means finding human faces in images or video streams by identifying facial features, usually by returning a bounding box around each face.

Face recognition

Face recognition means identifying whose face appears in a digital image or video source. The technology has been in development since the 1960s, but advances in technologies such as IoT, AI, and ML have made it widespread.

Face recognition is now used in many settings, including:

- Airline departure: Some airlines use this technology to identify passengers. It saves a lot of time and eliminates the hassle of manually keeping track of passengers’ tickets/boarding passes.

- Healthcare apps: Apps like Face2Gene use facial recognition technology to help identify certain genetic disorders. They do so by analyzing a patient's facial features and comparing them to a database of patients already diagnosed with various disorders.

- Social media: Platforms such as Facebook and Instagram have used facial recognition to spot faces in uploaded pictures and ask users whether they want to tag people from their network. Tagging grows the network of connections, which in turn increases the reach of content uploaded to the platforms.

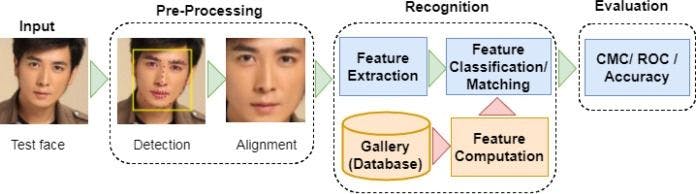

Steps involved in face recognition

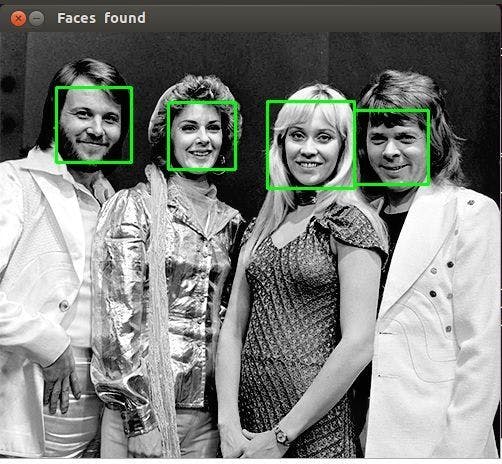

1. Face detection: The first step, in which faces are located and a bounding box is drawn around each one.

2. Face alignment: Each detected face is normalized, typically rotated and scaled so that landmarks such as the eyes sit in consistent positions, which makes faces easier to compare. Experiments show that alignment alone increases face recognition accuracy by almost 1%.

3. Feature extraction: A model, typically a deep neural network, converts the aligned face into a numerical feature vector (an embedding).

4. Face recognition: The last stage, in which the embedding of the input face is compared with the embeddings of known faces in the dataset to find out who it belongs to.
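Step 2 can be illustrated with a short sketch. It assumes the eye centers have already been found by a landmark detector and only computes how far the face is tilted; the function names and coordinates are hypothetical and not part of any library mentioned in this article.

```python
import math


def alignment_angle(left_eye, right_eye):
    """Angle (degrees) between the line joining the eyes and the horizontal.

    left_eye and right_eye are hypothetical (x, y) pixel coordinates of the eye
    centers, with y growing downwards as in image coordinates. A positive value
    means the right eye sits lower than the left one.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def eyes_midpoint(left_eye, right_eye):
    """Point halfway between the eyes, a natural center for the rotation."""
    return ((left_eye[0] + right_eye[0]) / 2, (left_eye[1] + right_eye[1]) / 2)


# Level eyes need no rotation; a 45-degree tilt needs a 45-degree correction.
print(alignment_angle((100, 120), (160, 120)))  # 0.0
print(eyes_midpoint((100, 100), (150, 150)))    # (125.0, 125.0)
```

The angle and midpoint could then be handed to an image-rotation routine, for example OpenCV's cv2.getRotationMatrix2D(center, angle, 1.0) followed by cv2.warpAffine, where positive angles rotate counter-clockwise and therefore level the eyes.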

Deep learning methods for face detection and recognition

1. DeepFace

- A lightweight face recognition framework for Python.

- A hybrid framework that wraps state-of-the-art models such as VGG-Face, FaceNet, DeepFace, Dlib, and others.

- This tutorial mainly uses three modules: DeepFace, OpenCV (for reading input images), and Matplotlib (for visualization).

Implementation

Install the required libraries in your Python environment, for example with `pip install deepface opencv-python matplotlib`.

Import the installed libraries:

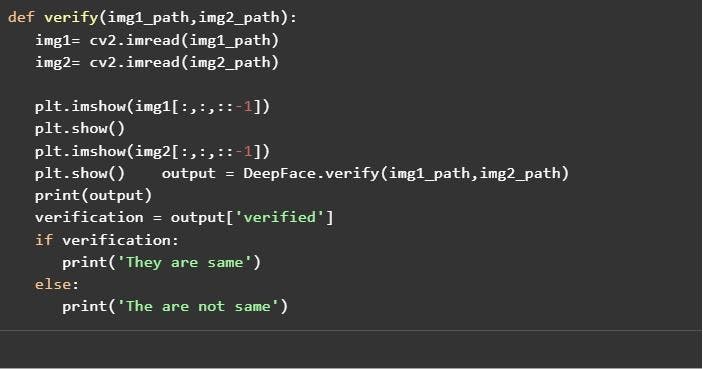

Function to read and verify the images:

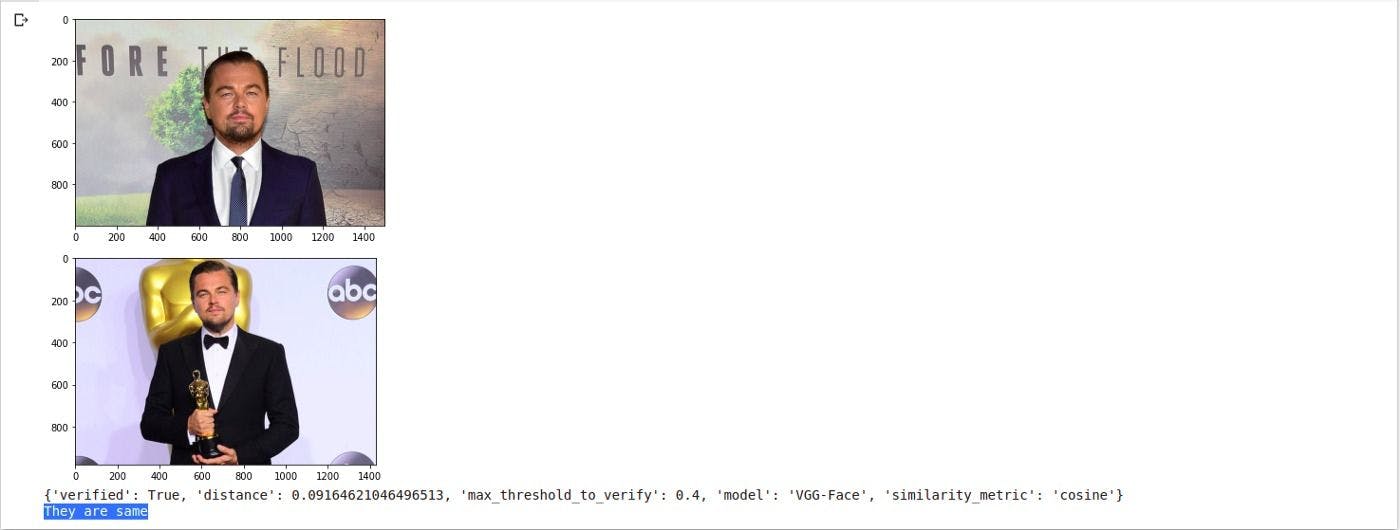

This function checks whether a pair of faces belongs to the same person or to different people. It takes the two image paths as inputs and returns a dictionary with the results, including a boolean "verified" key.
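A minimal sketch of such a function, assuming DeepFace is installed: DeepFace.verify and the "verified" key of its result come from the DeepFace API, while the helper names verify_pair and describe and the image paths are hypothetical. The import is deferred inside verify_pair so that describe can be tried without DeepFace.

```python
def describe(result):
    """Turn the dictionary returned by DeepFace.verify into a short sentence."""
    return "They are the same." if result["verified"] else "They are different."


def verify_pair(img1_path, img2_path):
    """Hypothetical helper: check whether two images show the same person."""
    from deepface import DeepFace  # deferred import; requires `pip install deepface`

    # DeepFace.verify detects and aligns both faces, embeds them with the
    # default VGG-Face model, and compares their distance with a threshold.
    result = DeepFace.verify(img1_path=img1_path, img2_path=img2_path)
    print("distance:", result.get("distance"))
    return describe(result)
```

With two photos of the same person, verify_pair("img1.jpg", "img2.jpg") would be expected to return "They are the same.".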

Call the verify function:

Output: They are the same.

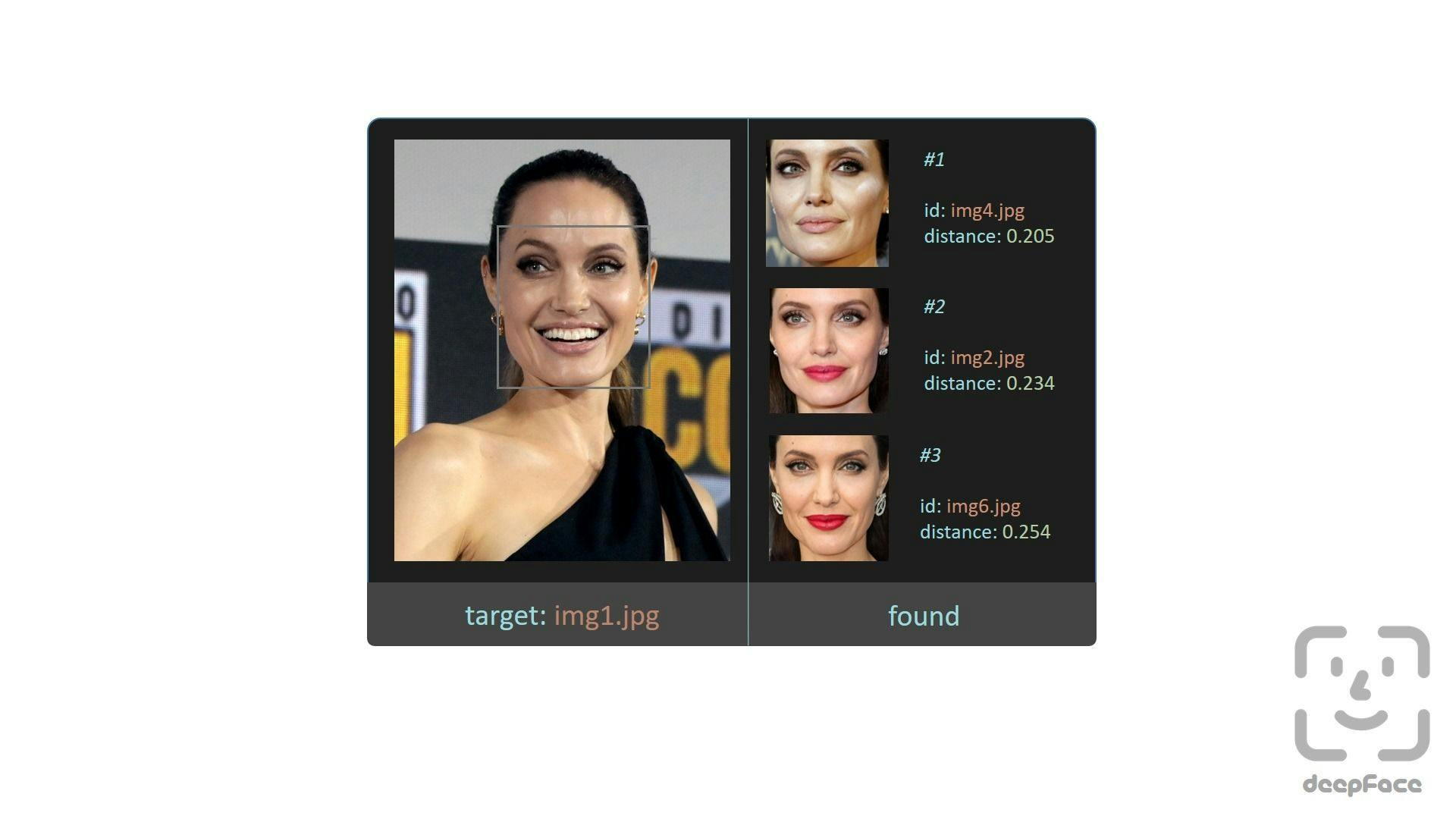

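Face recognition applies face verification many times: DeepFace.find compares the input image with the images in a database folder (db_path) and returns the matching identities as a pandas DataFrame. Recent versions return a list of DataFrames, one for each face detected in the input image.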

Output:

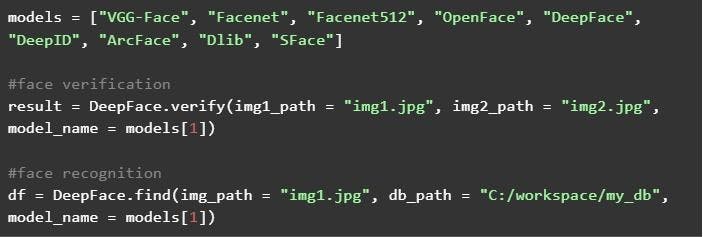
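Recognition against a folder of known faces can be sketched as follows, assuming DeepFace is installed. DeepFace.find with its img_path and db_path arguments, and the "identity" column of its result, come from the DeepFace API; recognize, identities, and the folder layout are hypothetical. Because recent versions return a list of DataFrames and older ones a single DataFrame, identities accepts both.

```python
def identities(find_result):
    """Collect matched identity paths from a DeepFace.find result.

    Accepts a single DataFrame or a list of DataFrames; each is expected to have
    an "identity" column holding the path of a matching database image.
    """
    frames = find_result if isinstance(find_result, list) else [find_result]
    matches = []
    for frame in frames:
        matches.extend(list(frame["identity"]))
    return matches


def recognize(img_path, db_path):
    """Hypothetical helper: search db_path for the person shown in img_path."""
    from deepface import DeepFace  # deferred import; requires `pip install deepface`

    # DeepFace.find verifies the input face against the images stored in db_path.
    result = DeepFace.find(img_path=img_path, db_path=db_path)
    return identities(result)
```

For example, recognize("query.jpg", "my_db") would return the paths of the database images that matched the person in query.jpg.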

Face recognition models

As discussed, DeepFace is a hybrid face recognition package that wraps state-of-the-art models such as VGG-Face, FaceNet, OpenFace, Facebook DeepFace, DeepID, ArcFace, Dlib, and SFace.

By default, DeepFace uses the VGG-Face model.

To use a different model, pass its name through the model_name argument. You can also loop over the list of models to compare them.
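The loop can be sketched as follows, assuming DeepFace's model_name argument and its model spellings (for example "Facenet" rather than "FaceNet"). compare_models and its verify_fn parameter are hypothetical; verify_fn defaults to DeepFace.verify but can be replaced by a stub to exercise the loop without downloading every model's weights.

```python
# Model names as spelled by DeepFace for the eight models listed above.
MODELS = ("VGG-Face", "Facenet", "OpenFace", "DeepFace",
          "DeepID", "ArcFace", "Dlib", "SFace")


def compare_models(img1_path, img2_path, models=MODELS, verify_fn=None):
    """Hypothetical helper: run verification once per model, collect verdicts."""
    if verify_fn is None:
        from deepface import DeepFace  # deferred import; requires `pip install deepface`
        verify_fn = DeepFace.verify

    verdicts = {}
    for name in models:
        # model_name selects which wrapped model computes the face embeddings.
        result = verify_fn(img1_path=img1_path, img2_path=img2_path, model_name=name)
        verdicts[name] = result["verified"]
    return verdicts
```

Note that the "Dlib" model additionally needs the dlib package to be installed.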

Accuracy of the models

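The models listed above achieve high accuracy on the Labeled Faces in the Wild (LFW) benchmark, most of them around 97% to 99% or higher as reported by their authors, although results vary with the model, face detector, and evaluation setup.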

Real-time analysis

DeepFace can be used on real-time video as well. Its stream function (DeepFace.stream) accesses a webcam and applies face recognition and facial analysis to the live feed.
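A minimal sketch of the stream call, assuming DeepFace is installed and a webcam is available. DeepFace.stream and its db_path argument come from the DeepFace API; start_stream and the folder name are hypothetical, and the folder check is an added convenience.

```python
import os


def start_stream(db_path):
    """Hypothetical helper: run DeepFace's real-time recognition on the webcam.

    db_path is a folder of images of known people (hypothetical layout).
    """
    if not os.path.isdir(db_path):
        raise FileNotFoundError(f"face database folder not found: {db_path}")
    from deepface import DeepFace  # deferred import; requires `pip install deepface`

    # Opens the default camera and matches detected faces against db_path.
    DeepFace.stream(db_path=db_path)
```

Calling start_stream("my_db") would open the webcam window and label faces that match images in my_db.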

2. Face_recognition library

The face_recognition library is built on dlib's deep-learning face recognition model and can recognize a person from a single reference image.

How face_recognition works

- You pass a picture of each person, along with their name, to the model.

- The model converts every picture into a numerical encoding and stores the encodings in one list, with the matching name labels in another list.

- When making a prediction, the model converts the input image into an encoding in the same way.

- The new encoding is compared with the stored encodings by distance; the stored encoding with the smallest distance is the closest match.

- Once the closest match is found, its label is returned as the detected person’s name.
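The steps above can be sketched in plain Python. This illustrates the idea rather than reproducing face_recognition's own code: FaceIndex is a hypothetical class, the short vectors stand in for the 128-dimensional encodings the library produces, and the 0.6 tolerance mirrors the default used by face_recognition.compare_faces.

```python
import math


class FaceIndex:
    """Hypothetical store of known encodings and their names (parallel lists)."""

    def __init__(self, tolerance=0.6):
        self.encodings = []          # one encoding per known picture
        self.names = []              # label for each encoding, same order
        self.tolerance = tolerance   # largest distance still accepted as a match

    def add(self, name, encoding):
        self.encodings.append(list(encoding))
        self.names.append(name)

    def identify(self, encoding):
        """Return the name of the closest stored encoding, or "Unknown"."""
        if not self.encodings:
            return "Unknown"
        # Euclidean distance from the query to every stored encoding.
        distances = [math.dist(known, encoding) for known in self.encodings]
        best = min(range(len(distances)), key=distances.__getitem__)
        return self.names[best] if distances[best] <= self.tolerance else "Unknown"


index = FaceIndex()
index.add("alice", [0.1, 0.2, 0.3])
index.add("bob", [0.9, 0.8, 0.7])
print(index.identify([0.12, 0.21, 0.29]))  # alice
print(index.identify([5.0, 5.0, 5.0]))     # Unknown
```

The tolerance is what turns "closest" into "close enough": without it, every query would be assigned to some known person, even a stranger.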

Implementation

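Install the required modules, for example with `pip install dlib face_recognition opencv-python` (installing dlib from source requires CMake and a C++ compiler):

Dlib: A modern C++ toolkit containing machine learning algorithms and tools. face_recognition is built on top of it.

OpenCV: Required for image preprocessing.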

Import libraries after installation:

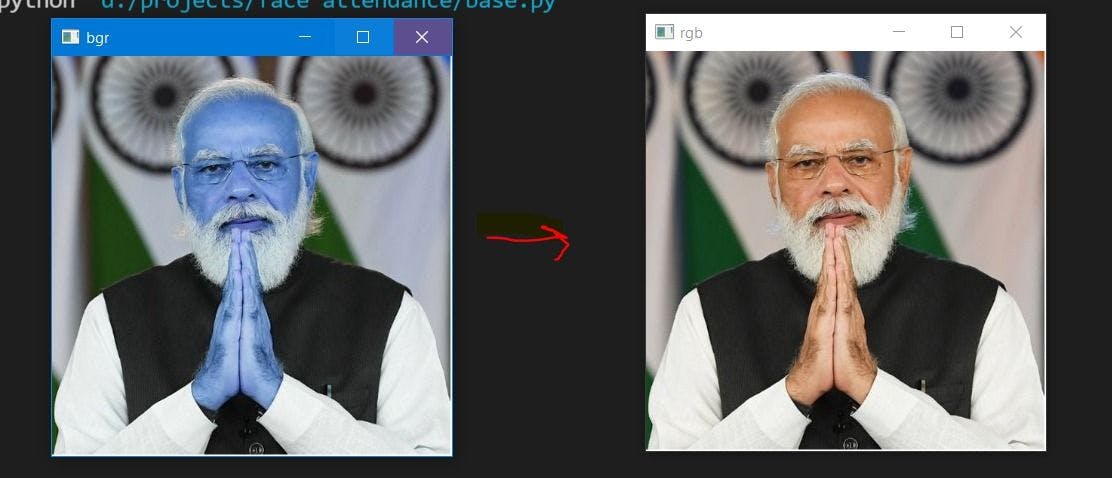

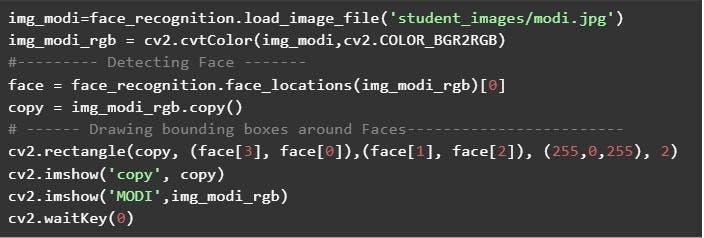

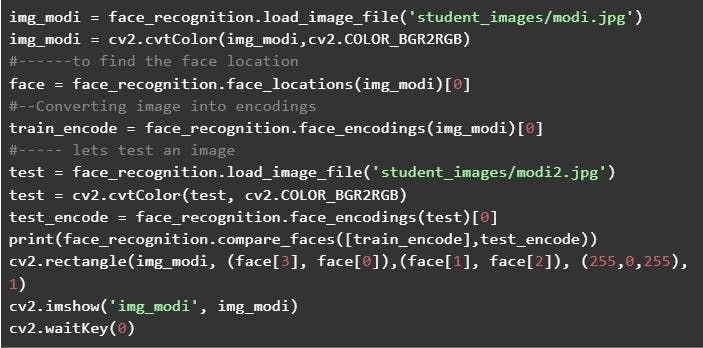

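Load a sample image with the face_recognition library:

cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB): OpenCV reads images in BGR channel order, whereas face_recognition works with RGB images, so an image read with OpenCV must be converted to RGB before it is passed to face_recognition. Conversely, an RGB image must be converted back to BGR before it is displayed with OpenCV's cv2.imshow.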

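Detecting and locating faces: face_recognition.face_locations finds faces without requiring a Haar cascade to be set up. By default it uses dlib's HOG-based detector, and passing model="cnn" switches to a more accurate deep-learning (CNN) detector.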

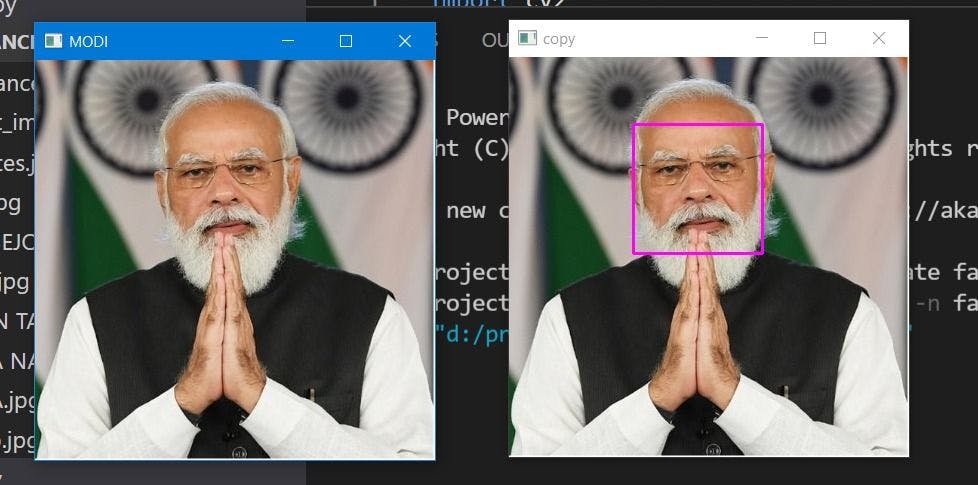

The face_recognition library detects the face successfully.
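A sketch of the detection step, assuming opencv-python and face_recognition are installed; detect_and_draw, to_cv2_corners, and the file paths are hypothetical. It applies the BGR-to-RGB conversion described earlier and converts the (top, right, bottom, left) boxes returned by face_locations into the corner points cv2.rectangle expects.

```python
def to_cv2_corners(location):
    """Convert a face_recognition box (top, right, bottom, left) into the
    ((left, top), (right, bottom)) corner points used by cv2.rectangle."""
    top, right, bottom, left = location
    return (left, top), (right, bottom)


def detect_and_draw(image_path, output_path):
    """Hypothetical helper: save a copy of the image with boxes around faces."""
    import cv2  # deferred imports; require opencv-python and face_recognition
    import face_recognition

    img_bgr = cv2.imread(image_path)  # OpenCV loads images in BGR order
    if img_bgr is None:
        raise FileNotFoundError(image_path)
    img_rgb = cv2.cvtColor(img_bgr, cv2.COLOR_BGR2RGB)  # face_recognition expects RGB

    # HOG detector by default; face_locations(img_rgb, model="cnn") uses the CNN one.
    locations = face_recognition.face_locations(img_rgb)
    for location in locations:
        top_left, bottom_right = to_cv2_corners(location)
        cv2.rectangle(img_bgr, top_left, bottom_right, (0, 255, 0), 2)  # green, 2 px

    cv2.imwrite(output_path, img_bgr)
    return locations
```

detect_and_draw("group.jpg", "group_boxes.jpg") would return one box per detected face and write the annotated copy to group_boxes.jpg.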

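face_recognition can recognize a person from a single reference image (one-shot recognition), because its pretrained deep-learning model produces encodings that can be compared directly, without training on many images of each person.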
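The comparison of two images can be sketched as follows, assuming face_recognition is installed; same_person, is_same, and the image paths are hypothetical. Instead of calling compare_faces directly, the sketch spells out the rule compare_faces applies (a distance at or below the tolerance, 0.6 by default, counts as a match) so the distance itself can be inspected.

```python
def is_same(distance, tolerance=0.6):
    """Same rule as face_recognition.compare_faces: a face distance at or
    below the tolerance counts as the same person."""
    return bool(distance <= tolerance)


def same_person(known_path, unknown_path, tolerance=0.6):
    """Hypothetical helper: compare the first face found in each image."""
    import face_recognition  # deferred import; requires `pip install face_recognition`

    known = face_recognition.face_encodings(face_recognition.load_image_file(known_path))
    unknown = face_recognition.face_encodings(face_recognition.load_image_file(unknown_path))
    if not known or not unknown:
        raise ValueError("no face found in one of the images")

    # face_distance returns one distance per known encoding; there is only one here.
    distance = face_recognition.face_distance([known[0]], unknown[0])[0]
    return is_same(distance, tolerance)
```

Lowering the tolerance makes matching stricter, which reduces false matches at the cost of more missed ones.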

The output is True because both input images were of the same person.

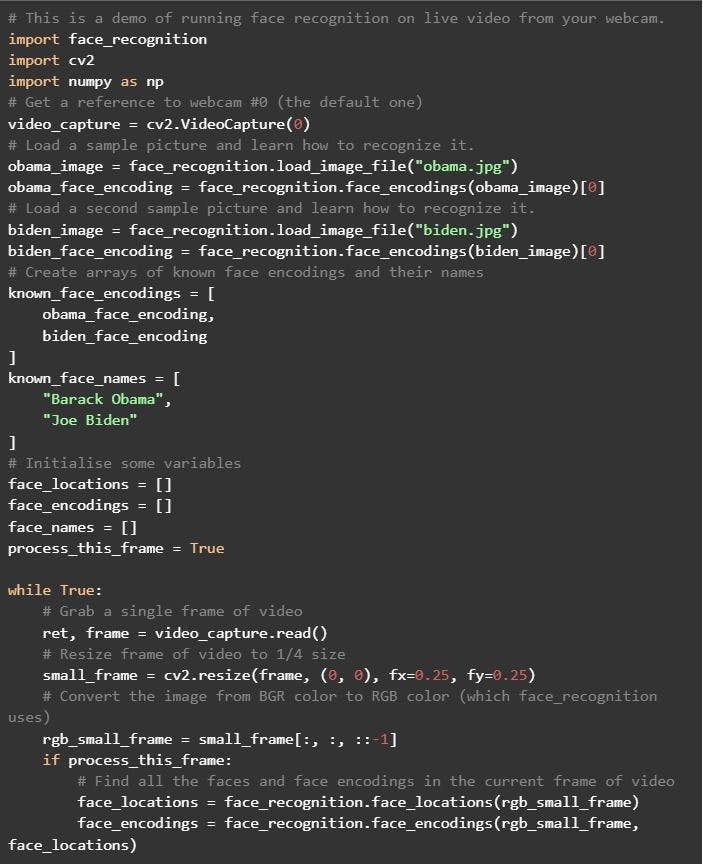

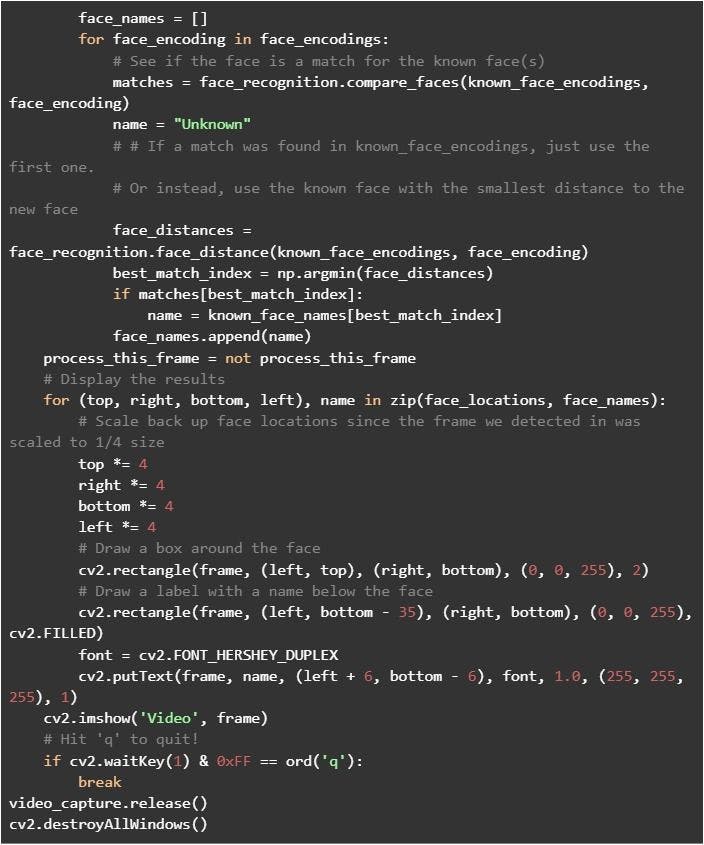

This library can also be used for real-time face recognition on webcam video.
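A real-time loop can be sketched as follows, assuming opencv-python, face_recognition, and a webcam are available. best_match and run_webcam are hypothetical helpers; the known encodings are assumed to have been computed beforehand from one reference photo per person, and the 0.6 tolerance mirrors face_recognition's default.

```python
def best_match(distances, names, tolerance=0.6):
    """Pick the name with the smallest distance, or "Unknown" if none is close enough."""
    if len(distances) == 0:
        return "Unknown"
    best = min(range(len(distances)), key=lambda i: distances[i])
    return names[best] if distances[best] <= tolerance else "Unknown"


def run_webcam(known_encodings, known_names, tolerance=0.6):
    """Hypothetical helper: label faces in webcam frames until q is pressed."""
    import cv2  # deferred imports; require opencv-python and face_recognition
    import face_recognition

    capture = cv2.VideoCapture(0)  # 0 = default camera
    try:
        while True:
            ok, frame_bgr = capture.read()
            if not ok:
                break
            frame_rgb = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2RGB)
            locations = face_recognition.face_locations(frame_rgb)
            encodings = face_recognition.face_encodings(frame_rgb, locations)
            for (top, right, bottom, left), encoding in zip(locations, encodings):
                # One distance per known person; the smallest decides the label.
                distances = face_recognition.face_distance(known_encodings, encoding)
                name = best_match(distances, known_names, tolerance)
                cv2.rectangle(frame_bgr, (left, top), (right, bottom), (0, 255, 0), 2)
                cv2.putText(frame_bgr, name, (left, top - 10),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 0), 2)
            cv2.imshow("Face recognition", frame_bgr)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    finally:
        capture.release()
        cv2.destroyAllWindows()
```

For example, known = [face_recognition.face_encodings(face_recognition.load_image_file("alice.jpg"))[0]] followed by run_webcam(known, ["Alice"]) would label Alice in the live feed. Processing every full-resolution frame can be slow on a CPU, so downscaling frames before detection is a common speed-up.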

Challenges in face recognition systems

The accuracy of facial recognition systems depends on several factors, each of which presents a challenge:

- Pose: Head orientation matters a great deal. Profile or tilted views are harder to match than frontal ones, and if the face is not visible, the system will fail.

- Facial expression: Sometimes, different facial expressions can result in different predictions for the same person’s image.

- Illumination: Images or video streams with poor lighting can result in bad predictions.

- Resolution: Low-resolution pictures contain less facial detail and are therefore poorly suited to recognition.

Aside from DeepFace and the face_recognition module, there are many other ways to implement real-time face recognition systems with deep learning. For the user interface, Python's Tkinter can be used to build GUI-based real-time applications on top of them.

FAQs

1. How does deep learning upgrade face recognition software?

Once face recognition software is developed, it needs upgrades over time to keep its performance consistent. Building such a system in the first place usually relies on one of two deep learning approaches:

a. Pre-trained models: One approach is to use already trained models, such as DeepFace by Facebook, FaceNet by Google, or Dlib's face recognition model. These models are trained on very large datasets and usually need no further training. If needed, they can be fine-tuned on your own data before being used in the software.

b. Developing neural networks from scratch: Building a face recognition model from scratch takes far more time and effort and requires millions of images in the training dataset, unlike pre-trained models, which need only a few images per person. Once the model reaches an acceptably low error rate, it can be used in face recognition software.

If a model's architecture or training parameters change significantly, or new images are collected, the model may need to be retrained. Because models are usually deployed behind APIs, this retraining can be done on the backend without changing client applications.

2. Which machine learning algorithm is the best for face recognition?

ArcFace is widely regarded as one of the best recognition models, both for the challenges discussed in this article and for other scenarios such as:

- Constrained and unconstrained environments

- Low-resolution or blurry images, pose variation, and poor illumination

- Age-invariant or cross-age face recognition (adult, child)

- Mask-wearing face recognition