How to Overcome LLM Training Challenges

•11 min read

- LLM training and enhancement

Large language models (LLMs) like Google's Gemini and OpenAI’s ChatGPT are rapidly changing the business landscape. These powerful AI tools transform how we interact with machines and provide services that range from writing assistance to customer support to complex problem-solving. A new report by Pragma Market Research estimates that by 2030, the global LLM market size will reach over US$259 billion, which reflects the immense potential for efficiency and innovation across various industries.

However, the great potential of LLMs comes with significant challenges to training them. Many companies struggle to fully embrace LLMs due to limitations like a lack of high-quality datasets for training, bias in AI understanding and output, insufficient computational resources, and the complexity of training these models. Adding to this pressure, IDC research found that 56 percent of executives feel significant pressure to adopt generative AI (GenAI). Without tackling these hurdles, the true potential of LLMs cannot be realized, and businesses may fall behind in the competitive race to harness AI.

This blog offers a comprehensive guide to navigating the complexities of LLM training and best practices for training LLMs. Let’s get started!

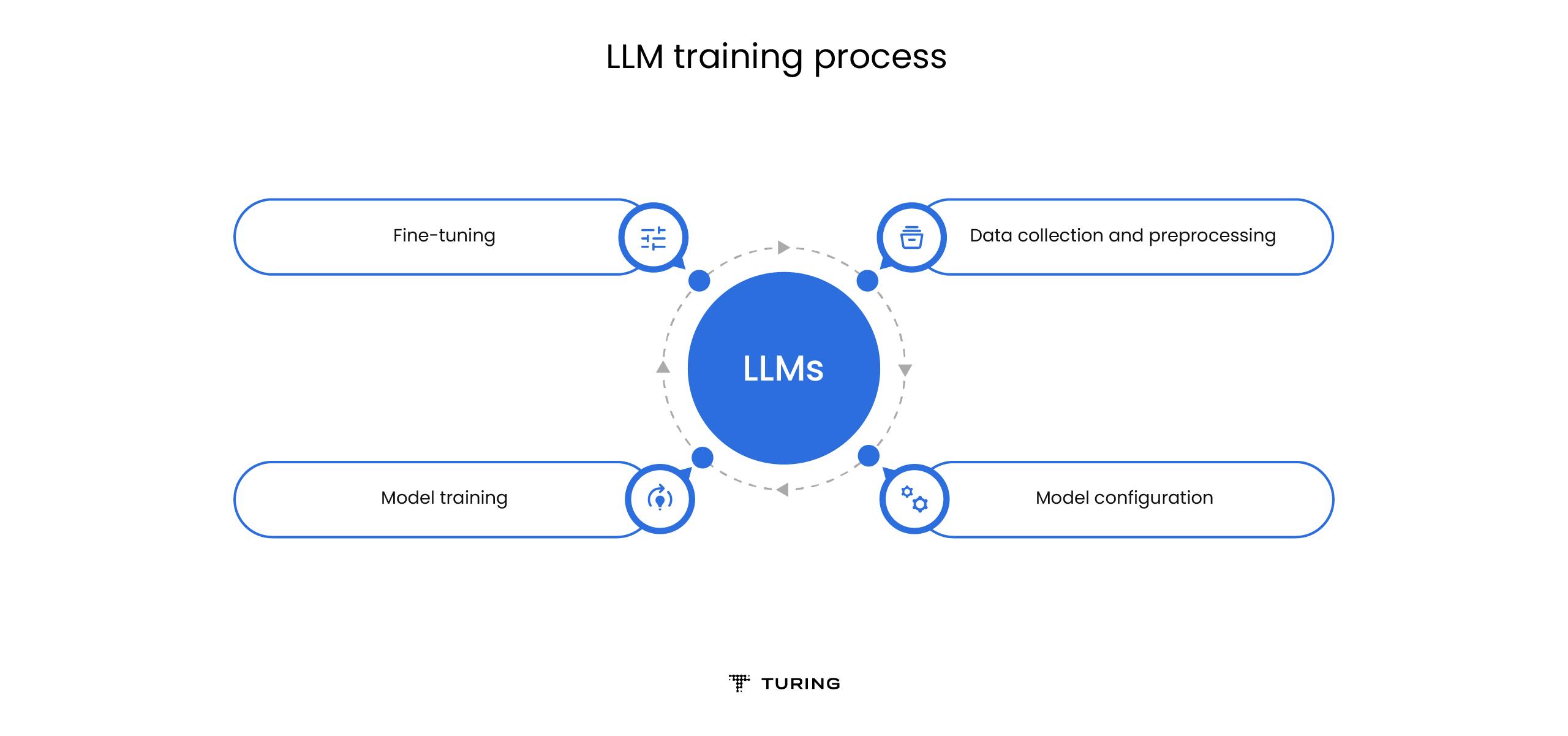

How does LLM training work?

Training LLMs is a complex process divided into several key stages. Understanding these steps is crucial for building LLMs or improving upon existing models.

a. Data collection and preprocessing

The initial step in training an LLM is data collection and preparation. This data is sourced from various repositories, including scientific articles, websites, books, and curated databases. However, you need to clean and preprocess the raw information before using it to train the models. This involves removing unwanted noise, rectifying format inconsistencies, and discarding irrelevant details to prepare the data for tokenization.

During tokenization, the text is segmented into smaller, manageable units such as words or subwords. Techniques like Byte-Pair Encoding or WordPiece break down text into smaller pieces, called tokens, that the neural network uses for learning. These tokens form the foundation of the model's vocabulary and are critical for the model to start predicting language patterns.

b. Model configuration

After preprocessing the data, the next step is to configure the model, typically a transformer-based neural network due to its proven efficacy in natural language processing (NLP) tasks. Setting up this architecture involves specifying various parameters, such as the number of layers in the transformer blocks, the number of attention heads, and a variety of hyperparameters. Researchers extensively test different settings to find the best configuration that helps the model learn efficiently.

c. Model training

Once configuration is complete, the model enters the training phase where it’s exposed to the prepared text data. The model's objective is to predict the next word in a sequence, given the preceding words. When it predicts correctly, the model's internal weights are reinforced; when it errs, the weights are adjusted. This process contains countless iterations—spanning millions or even billions of instances across a huge dataset to refine the model's predictive capabilities.

d. Fine-tuning

As the model begins to form a basic understanding of language patterns from the extensive data it's exposed to, you can apply a higher level of structure to the model’s learning process with supervised fine-tuning (SFT). Instead of simply predicting the next word, the model learns to understand and generate responses aligned with human-provided examples. The SFT phase trains the model to provide accurate and contextually appropriate responses, which is essential for tools such as chatbots or virtual assistants. The model can undergo additional training with more data to improve its predictive accuracy and generalization capabilities.

Reinforcement learning from human feedback (RLHF) is an advanced stage of fine-tuning in which the model's responses are fine-tuned by comparing them with human preferences to ensure they are helpful, honest, and harmless (HHH). The model learns to prioritize better responses by creating various possible answers for a given input and having human labelers rank these from best to worst.

The RLHF process trains a reward model that understands the nuances of effective and ethical human conversation. Once trained, the reward model can replace human judgment at scale by providing feedback that helps tune the LLM to produce high-quality, valuable responses while avoiding misinformative or potentially harmful content.

In the next section, we'll delve deeper into the specific challenges presented by these stages and how they contribute to the broader complexities of LLM training.

Challenges of training LLMs

a. Data generation and validation

The data used for training LLMs must be carefully collected from diverse sources and then cleaned and organized into training, validation, and testing sets. Additionally, it needs to be reviewed for representation, bias, and ethical soundness. Building a data pipeline with the right labels and annotations is a time- and resource-intensive process, which makes it challenging for researchers and practitioners.

Here are some of the ways to approach data generation and validation concerns:

- Creative strategies for dataset expansion: Employ techniques like back-translation, word replacement, and sentence shuffling to add richness and variety to your existing data.

- Utilize available resources: Public datasets relevant to your task can serve as a solid starting point. They are freely accessible and can reduce the burden of initial data collection.

- Crowdsourcing and expert review: Leverage online platforms to engage with different people who can help collect and label data to increase the diversity of your training dataset. Additionally, using human reviewers to monitor and moderate model’s outputs can ensure the model learns from human feedback and generates the desired output.

- Enhancing model integrity: Implement strict data filtering and preprocessing routines to eliminate any offensive or inappropriate content. Incorporating adversarial training methods that expose the model to challenging examples can enhance its resilience against toxic inputs. Additionally, you can avoid biases by fine-tuning the model using curated datasets.

b. Optimizing reasoning capabilities

Developing LLMs that can process information and reason with humanlike logic remains one of the most advanced challenges in AI today. Models can understand and produce logic by recognizing language patterns, but true reasoning involves combining facts to draw conclusions.

One focused approach is to teach models the intricacies of coding. Coding represents a structured way of thinking, where logical operations are understood and applied in a precise sequence. This teaches the model systematic thinking, helping it to identify errors and figure out how to fix them, much like a coder debugging a program.

Another approach is RLHF which can boost a model's reasoning by incorporating human feedback into its learning process. "Chain-of-thought" prompting is another method that guides LLMs in the reasoning process by following logic examples.

DeepMind, for example, built a "faithful reasoning" framework for question-answering systems. It uses two specialized LLMs, one for selecting accurate information and another for inference, to construct a solid chain of logic. Each step in the process leads to the creation of a valid reasoning trace. For tasks that require multi-step deduction and scientific question answering, DeepMind's framework exceeds basic models in providing accurate final answers and generates easy-to-understand reasoning traces.

Despite these efforts, LLMs may falter as complexity grows, such as with natural language arithmetic questions. Large-scale neuro-symbolic AI systems like AI21 Labs' MRKL use a modular approach to reasoning that combines knowledge, computation, and natural language expression. This approach takes advantage of the full power of modern LLMs while overcoming their limitations, such as outdated or exclusive data and shortcomings in symbolic reasoning.

Finding effective ways to optimize reasoning capabilities, particularly through coding, can be extrapolated to broader logical applications. This enhances the model's problem-solving abilities and establishes a level of trust and reliability in the LLMs' capabilities.

c. Bias and hallucinations

Training LLMs presents two key challenges that can affect the model’s reliability and utility: bias and hallucinations. Even with extensive training on vast datasets, LLMs can still mimic deeply rooted biases present in the data and generate outputs that may reflect societal biases and distort the model's responses. Biases in AI can stem from various sources, including non-representative data reflecting societal norms, selection bias from limited data varieties, algorithmic bias from flawed processing, and biases rooted in race, culture, and politics. These biases can significantly alter how the AI understands and interprets the world.

Bias in LLMs can be eliminated by:

- conducting thorough audits of the training datasets for equitable representation.

- developing and applying algorithms that neutralize latent biases in data.

- regular monitoring and iterative updates to the model to rectify any newly identified biases.

Hallucinations happen when LLMs get things wrong—producing information that diverges from reality. This can happen if the AI gets confused by the context or if there's missing information. These false outputs are incorrect and can be misleading when the stakes are high.

Hallucinations in LLMs can be prevented by:

- improving the neural network’s architecture for better context processing.

- enhancing the training data to provide a more accurate basis for predictions.

- involving human judgment in evaluating LLM output, especially for sensitive use cases.

By addressing bias and hallucinations, companies can create models that are efficient, fair, and reliable—qualities essential for the responsible long-term use of the technology.

Want ethical AI that works in the real world?

Ensure Model Safety with Turingd. Quality control and monitoring

Building a robust quality control setup is tough, whether you're tweaking an existing model or building one from scratch. The success of an LLM largely depends on its training data.; the model can only fulfill its full potential if the data is carefully preprocessed, diverse, and representative. This approach has proven successful by BloombergGPT, which was trained using a curated dataset consisting of 51 percent financial data and 49 percent general data. This strategy led to the model’s remarkable performance in financial tasks without compromising general LLM benchmarks.

Similarly, the Med-PaLM 2 model showcases the importance of quality input and stringent review by becoming the first LLM to pass the U.S. Medical Licensing Examination (USMLE), with a score of 67.2 percent on the MedQA dataset. The model underwent rigorous human evaluations that particularly focused on its clinical utility by assessing long-form medical questions across various dimensions.

Here’s a three-step approach to setting up effective quality control and monitoring:

Establish the right evaluation metrics: These metrics serve as benchmarks during and after training to ensure the LLM meets the defined quality standards. Some of the common metrics used to evaluate LLMs include:

a. Perplexity quantifies how uncertain a model is about the predictions it makes or measures the model's confidence in generating the next part of the text. A lower perplexity score indicates that the model is better at predicting the sample.

b. ROUGE (recall-oriented understudy for gisting evaluation) compares the overlap of word sequences between the output of the LLM and a human-produced reference text. This metric gauges the quality of machine-generated summaries or translations in terms of their alignment with human expectations.

c. F1 Score measures a model’s accuracy by considering both precision (how many selected items are relevant) and recall (how many relevant items are selected). This score is useful when it's crucial to manage both false positives and false negatives effectively.

How does your model measure up?

Evaluate Your Model NowContinuous evaluation and iteration: Throughout training, the model is continuously assessed and fine-tuned to ensure each iteration brings the model closer to the desired level of performance. You can use libraries like Evaluate and TensorFlow Model Analysis (TFMA) for evaluating models and datasets.

Post-training review and feedback integration: Post-training evaluations, complemented by feedback from users, provide insights for refining the LLMs to make it robust and relevant.

Despite the importance of manual review in maintaining quality, there's a push to strike the right balance between using automated systems and human oversight. This balance is crucial for achieving scalable, efficient, and high-quality LLMs that can be integrated into real-world applications.

e. Technical expertise and operations management

Training and deploying LLMs requires a deep understanding of the technical aspects, such as deep-learning algorithms and transformers. Furthermore, it requires proficiency in coordinating the activities of numerous GPUs and managing the vast software and hardware intricacies.

- Scaling teams rapidly: The ongoing evolution of LLM training projects and new workstreams demands the rapid scaling of teams with skilled LLM trainers and domain experts to maintain an edge in GenAI development. Training LLMs requires professionals with expertise in complex algorithms, model architectures, data cleaning, and model fine-tuning. However, due to the recent advancements in LLM technology, there's still an evident gap between the available skilled workforce and the growing demand that results in competitive hiring environments.

LLM training services have grown increasingly valuable by filling the gaps with the expertise needed to train, deploy, and maintain advanced LLMs. Beyond hiring, it's important to train existing employees on AI and ensure they know how to effectively use the tools, understand the limitations of LLMs, and integrate them into everyday tasks. Business leaders play a crucial role in this process by creating clear usage policies and offer ongoing training to maintain proficiency with these technologies. - Managing the operations: Running an LLM training and development team means investing in the support and guidance of each team member. It involves training developers, resolving their queries, overseeing output quality, and keeping them invested in their work. A developer success team is key to keeping teams motivated and productive by evaluating performance and encouraging a culture that promotes independent exploration and adoption of new AI tools in business processes.

Wrapping up

LLM training is a complex process that demands precision, innovative strategies, and a proactive approach to technology's ever-evolving landscape. Reflecting on the current limitations of LLMs, Yann LeCun, a Turing Award-winning machine learning researcher, observes, “It is clear that these models are doomed to a shallow understanding that will never approximate the full-bodied thinking we see in humans.” It’s critical to recognize the limitations of what AI can and cannot know through language alone. Companies must overcome biases, strengthen data quality, enhance reasoning skills, and manage both technical operations and talent to leverage the full potential of LLMs.

Turing emerges as the go-to partner for LLM development services, bringing experience from serving over 1,000 clients, including top foundational LLM companies. We specialize in advancing models’ reasoning and coding abilities. Our strength lies in generating high-quality, proprietary human data essential for supervised fine-tuning (SFT), reinforcement learning from human feedback (RLHF), and direct preference optimization (DPO).

With our unique combination of AI-accelerated delivery, on-demand tech talent, and customized solutions, Turing can deliver the expertise and data you need to power your LLM strategies.

Talk to an expert today!

Training LLMs right takes more than just compute

Get expert support across data prep, fine-tuning, alignment, and evaluation—so you can build models that actually work in the real world.

Explore LLM Training Services