Reinforcement Learning: What It Is, Algorithms, Types and Examples

Machine learning is a subset of artificial intelligence in which most algorithms fall under supervised or unsupervised learning. They range from linear models such as linear regression and logistic regression to nonlinear ones such as support vector machines. Apart from these, there is a third category called reinforcement learning. In this article, we will learn what reinforcement learning is, along with its types and applications.

Reinforcement learning: Neither supervised nor unsupervised

Reinforcement learning is a type of machine learning that can’t be classified as either supervised or unsupervised. It falls somewhere between them. It does not require labeled datasets, so it can’t be classified as supervised learning. On the other hand, it does not search for hidden structure in unlabeled data; it learns from feedback in the form of rewards and penalties, so it can’t be classified as unsupervised learning either.

The basic idea behind reinforcement learning is that an agent corrects the actions it takes in different scenarios through trial and error. Rather than being trained on a fixed dataset, it learns by interacting with an environment, which may be a real-life situation or a simulation of one. For each action it takes, it receives either a reward or a penalty. The overall aim is to maximize the total reward collected over time.

Reinforcement learning can be used in a variety of applications, such as game playing, robotics, and autonomous driving. It is also used to solve complex problems in areas like finance, healthcare, and energy management.

How does reinforcement learning work?

The key components of a reinforcement learning system are the agent, the environment, and the reward signal. The agent learns to take actions based on its current state and the reward signal it receives from the environment. The environment determines the outcomes of the agent's actions and provides feedback in the form of the reward signal. The reward signal is a scalar value that reflects how well the agent is doing in achieving its goal.
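The interaction between these components can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: `CorridorEnv`, its reward values, and the `run_episode` helper are hypothetical names invented for this example.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: positions 0..4, with the goal at position 4."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # action: 0 = move left, 1 = move right (walls clamp the position)
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else -0.1  # scalar reward signal (assumed values)
        return self.state, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one agent-environment loop and return the total reward collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent picks an action from the state
        state, reward, done = env.step(action)  # environment responds with feedback
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(state):
    return random.choice([0, 1])
```

Calling `run_episode(CorridorEnv(), random_policy)` plays one episode with an untrained agent. A learning algorithm would replace `random_policy` with a policy that improves from the rewards it observes.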

There are several algorithms that can be used to train reinforcement learning agents, such as Q-learning, policy gradient methods, and actor-critic methods. These algorithms differ in how they estimate the expected cumulative reward and update the agent's policy.

One of the challenges of reinforcement learning is the exploration-exploitation trade-off. The agent must balance between taking actions that have high expected rewards based on its current knowledge (exploitation) and taking actions that may lead to new information and potentially higher rewards in the future (exploration).
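One common way to handle this trade-off is an epsilon-greedy rule, which the article does not name but which is widely used. The sketch below assumes the agent keeps a list of estimated action values; `epsilon_greedy`, `q_values`, and `epsilon` are illustrative names.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index from a list of estimated action values.

    With probability `epsilon` the agent explores (uniformly random action);
    otherwise it exploits (action with the highest current estimate).
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                       # exploration
    return max(range(len(q_values)), key=lambda a: q_values[a])   # exploitation
```

Setting `epsilon` to 0 gives a purely exploiting agent, while 1 gives a purely exploring one. In practice the value is often started high and decreased as the agent gains experience.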

Another challenge is the curse of dimensionality, where the number of possible states and actions in a complex environment can be very large, making it difficult to learn an optimal policy. This challenge can be addressed by using function approximation or other techniques to reduce the dimensionality of the problem.
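As a rough illustration of function approximation, the sketch below replaces a table of state values with a linear function of a small feature vector, updated with a semi-gradient TD(0) rule. The feature design, the choice of TD(0), and all names (`features`, `predict`, `td0_update`) are assumptions made for this example rather than something the article prescribes.

```python
def features(position, n_positions):
    """Map a position to a 2-element feature vector: a bias term and a scaled position."""
    return [1.0, position / (n_positions - 1)]


def predict(weights, phi):
    """Approximate state value as a weighted sum of features."""
    return sum(w * f for w, f in zip(weights, phi))


def td0_update(weights, phi, reward, phi_next, done, alpha=0.1, gamma=0.9):
    """One semi-gradient TD(0) step for a linear value function."""
    target = reward if done else reward + gamma * predict(weights, phi_next)
    error = target - predict(weights, phi)
    # For a linear model, the gradient of the prediction w.r.t. the weights is phi.
    return [w + alpha * error * f for w, f in zip(weights, phi)]
```

However many positions the environment has, the agent here stores only two weights, which is the point of the technique: similar states share parameters instead of each needing its own table entry.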

Types of reinforcement learning models and frameworks

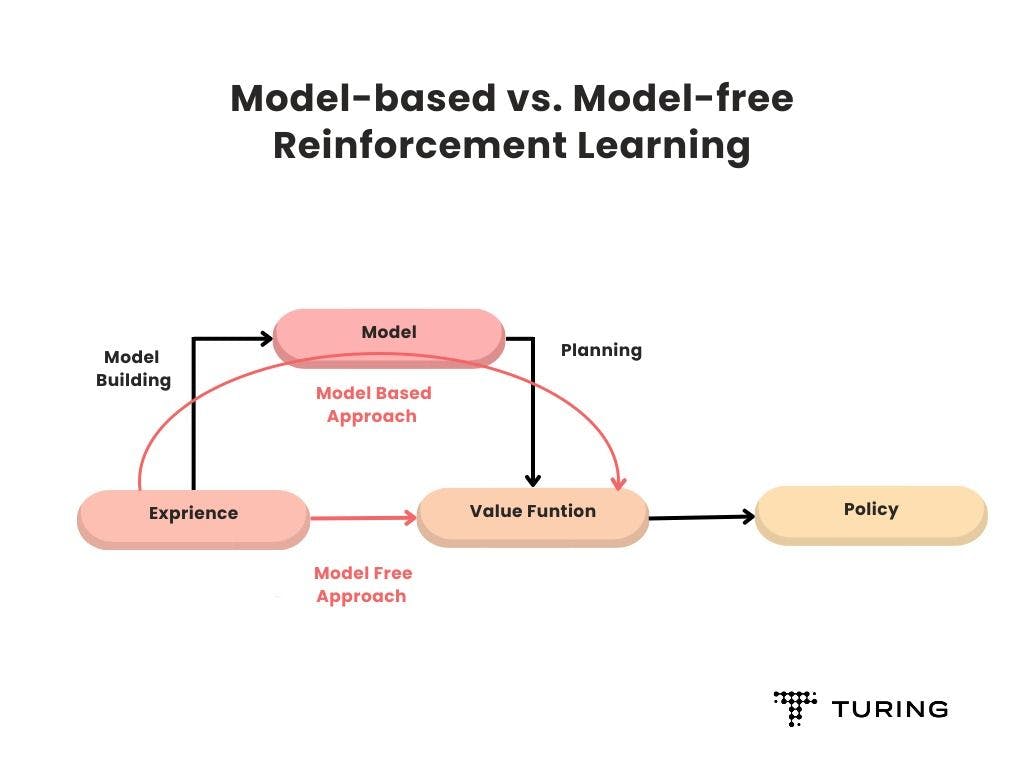

In this section, we will discuss two main types of algorithms: model-free and model-based reinforcement learning.

Model-based algorithms

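In model-based algorithms, the agent has access to, or learns, a model of the environment that predicts the next state and reward for each action. It uses these predictions to plan: it compares the expected outcomes of candidate actions and picks the one expected to yield the most reward. If the agent looks only one step ahead, this reduces to a greedy choice; looking further ahead lets it weigh future rewards as well.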

It is used in situations where we have complete knowledge about an environment and the outcome of the actions in that environment. For environments that are fixed or static in nature, model-based algorithms are more suitable. They also allow agents to plan ahead.
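To make planning with a known model concrete, here is a small sketch. The `MODEL` dictionary, its states and rewards, and the discount factor `gamma` are all hypothetical values chosen for illustration; the planner is a simple depth-limited lookahead, one of many possible planning methods.

```python
# Hypothetical known model: MODEL[state][action] -> list of (probability, next_state, reward)
MODEL = {
    "A": {"left": [(1.0, "T", 2.0)], "right": [(1.0, "B", 0.0)]},
    "B": {"go": [(1.0, "T", 10.0)]},
    "T": {},  # terminal state: no actions available
}


def action_value(model, state, action, depth, gamma=0.9):
    """Expected discounted return of taking `action`, then planning `depth - 1` more steps."""
    return sum(
        prob * (reward + gamma * plan_value(model, next_state, depth - 1, gamma))
        for prob, next_state, reward in model[state][action]
    )


def plan_value(model, state, depth, gamma=0.9):
    """Best expected discounted return from `state` when looking `depth` steps ahead."""
    actions = model.get(state, {})
    if depth == 0 or not actions:
        return 0.0
    return max(action_value(model, state, a, depth, gamma) for a in actions)


def plan(model, state, depth, gamma=0.9):
    """Choose the action with the highest lookahead value."""
    return max(model[state], key=lambda a: action_value(model, state, a, depth, gamma))
```

With a one-step lookahead, `plan(MODEL, "A", 1)` picks `"left"` for its immediate reward of 2. With a two-step lookahead, `plan(MODEL, "A", 2)` picks `"right"`, because the model predicts a larger reward one step later.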

Model-free algorithms

In model-free algorithms, the agent carries out multiple actions multiple times and learns from the outcomes. Based on the learning experience, it tries to decide a policy or a strategy to carry out actions with an aim to get optimal reward points. This type of algorithm should be applied to environments with a dynamic nature and where we don’t have complete knowledge about them.

An example is autonomous driving, where the environment is dynamic and traffic conditions and routes can change at any time. Model-free algorithms are well suited to such situations.
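Q-learning is a widely used model-free algorithm. The sketch below runs tabular Q-learning on a hypothetical five-position corridor; the environment, reward values, and hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen to keep the example small. Note that the agent never reads the environment's rules directly; it only observes the results of `step`.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical environment dynamics, treated as a black box by the agent."""
    next_state = min(max(state + (1 if action == 1 else -1), 0), N_STATES - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else -0.1), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, max_steps=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] estimates
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy action selection: mostly exploit, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Q-learning update: move the estimate toward reward + discounted best next value.
            best_next = 0.0 if done else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * best_next - q[state][action])
            state = next_state
            if done:
                break
    return q
```

After training, the greedy policy can be read off the table with `max(ACTIONS, key=lambda a: q[s][a])` for each state `s`. In this corridor it should prefer moving right toward the goal.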

Reinforcement learning techniques

The following is a list of various reinforcement learning techniques:

- Markov decision process (MDP)

- Bellman equation

- Dynamic programming

- Value iteration

- Policy iteration

- Q-learning

We will discuss MDP, value iteration, and policy iteration techniques in detail.

Markov decision process

A Markov decision process is a framework for modeling a sequence of decisions made by an agent that interacts with an environment over time. At each step, the agent observes the current state of the environment and uses it to choose an action. After the action is taken, the environment transitions to a new state and gives a reward based on that action.

The process repeats, creating a trajectory of states, actions, and rewards. The objective is not just to maximize the current reward but to maximize the overall reward. This avoids a greedy approach that only seeks the maximum reward for the current state. Instead, the agent may opt for a small reward now if it can lead to a greater overall reward.
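The "overall reward" of a trajectory is commonly measured as a discounted sum, where a discount factor (called `gamma` here, an assumption not introduced in the text above) weights later rewards slightly less than earlier ones. A minimal sketch, with made-up reward sequences:

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards along a trajectory, each discounted by gamma per time step."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Two hypothetical trajectories from the same start state:
greedy_path = [1.0, 0.0, 0.0]   # grabs a small reward immediately
patient_path = [0.0, 0.0, 5.0]  # accepts nothing now for a larger reward later
```

Comparing `discounted_return(greedy_path)` with `discounted_return(patient_path)` shows the second trajectory scoring higher even after discounting, which is why an agent that maximizes overall reward may pass up an immediate one.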

Value iteration

This technique obtains the optimal policy, which refers to the best action for a given state, by selecting in each state the action with the highest optimal action value Q(s,a). The optimal state-value function V(s) is computed using an iterative process, which gives value iteration its name.

The method initializes the state-value function (V) with arbitrary values (random values or zeros) and then iteratively improves its estimate until convergence. During each iteration, Q(s,a) is computed for every action and V(s) is updated to the largest of them. For a finite MDP with a discount factor below 1, value iteration converges to the optimal state-value function.
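The procedure can be sketched as follows. The three-state `MODEL`, the discount factor `gamma`, and the stopping tolerance `tol` are hypothetical choices for illustration, and V is initialized to zeros here, which is one valid arbitrary starting point.

```python
# Hypothetical MDP: MODEL[state][action] -> list of (probability, next_state, reward)
MODEL = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(1.0, "B", 0.0)]},
    "B": {"back": [(1.0, "A", 0.0)], "finish": [(1.0, "T", 1.0)]},
    "T": {},  # terminal state
}


def q_value(model, values, state, action, gamma):
    """Q(s,a): expected reward plus discounted value of the next state."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in model[state][action])


def value_iteration(model, gamma=0.9, tol=1e-8):
    values = {s: 0.0 for s in model}  # initial V(s)
    while True:
        delta = 0.0
        for state, actions in model.items():
            if not actions:
                continue  # terminal states keep a value of 0
            best = max(q_value(model, values, state, a, gamma) for a in actions)
            delta = max(delta, abs(best - values[state]))
            values[state] = best  # V(s) <- max over a of Q(s,a)
        if delta < tol:
            break
    # Read the policy off the converged values: best action in each state.
    policy = {
        s: max(acts, key=lambda a: q_value(model, values, s, a, gamma))
        for s, acts in model.items() if acts
    }
    return values, policy
```

Calling `value_iteration(MODEL)` returns both the converged state values and the policy derived from them. For this small example, the policy heads from A to B and then finishes.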

Policy iteration

A policy in reinforcement learning is a rule that specifies which action to take in each state; an optimal policy is one that maximizes the reward. Policy iteration has two phases: (a) policy evaluation and (b) policy improvement.

(a) Policy evaluation: Computes the values of the different states of the environment under the current policy.

(b) Policy improvement: The next step after policy evaluation, which uses the state values to update the policy so that it selects actions leading to higher values.

Initially, the agent is assigned a random policy, and policy evaluation computes the state values that policy produces. Then, policy improvement updates the policy, which in turn changes the state values. The algorithm alternates between the two phases until the policy stops changing, at which point the policy and its state values are optimal.
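The two phases can be sketched as below. The small `MODEL`, the discount factor `gamma`, and the tolerance `tol` are hypothetical, and the initial policy simply takes the first listed action in each state rather than a truly random one, so that the example is reproducible.

```python
# Hypothetical MDP: MODEL[state][action] -> list of (probability, next_state, reward)
MODEL = {
    "A": {"stay": [(1.0, "A", 0.0)], "go": [(1.0, "B", 0.0)]},
    "B": {"back": [(1.0, "A", 0.0)], "finish": [(1.0, "T", 1.0)]},
    "T": {},  # terminal state
}


def q_value(model, values, state, action, gamma):
    return sum(p * (r + gamma * values[s2]) for p, s2, r in model[state][action])


def policy_evaluation(model, policy, gamma=0.9, tol=1e-8):
    """Phase (a): compute state values under a fixed policy."""
    values = {s: 0.0 for s in model}
    while True:
        delta = 0.0
        for state, action in policy.items():
            new_value = q_value(model, values, state, action, gamma)
            delta = max(delta, abs(new_value - values[state]))
            values[state] = new_value
        if delta < tol:
            return values


def policy_improvement(model, values, gamma=0.9):
    """Phase (b): in each state, switch to the action with the highest value."""
    return {
        s: max(acts, key=lambda a: q_value(model, values, s, a, gamma))
        for s, acts in model.items() if acts
    }


def policy_iteration(model, gamma=0.9):
    # Arbitrary initial policy: the first listed action in every non-terminal state.
    policy = {s: next(iter(acts)) for s, acts in model.items() if acts}
    while True:
        values = policy_evaluation(model, policy, gamma)
        new_policy = policy_improvement(model, values, gamma)
        if new_policy == policy:  # no change: the policy is stable
            return values, policy
        policy = new_policy
```

On this example, `policy_iteration(MODEL)` starts from the policy "stay in A, go back from B" and, after a few rounds of evaluation and improvement, settles on "go from A, finish from B".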

Applications of reinforcement learning

Apart from its application in self-driving cars, there are other areas where reinforcement learning is used. Let's discuss them in brief:

1. Natural language processing: Within NLP, reinforcement learning is used for many different purposes like chatbots for question answering, summarizing long texts in a few sentences, and in virtual assistants.

2. Healthcare: Reinforcement learning is an active research area in healthcare. Agents equipped with biological information are being trained to assist with tasks such as precision surgery. They are also being explored for improving disease diagnosis, predicting the onset of diseases when treatment is delayed, and more.

3. Robotics and industries: Deep reinforcement learning is widely used in robotics due to the sequential nature of robot actions. These agents are capable of learning to interact with dynamic environments which makes them useful for various applications in industrial automation and manufacturing.

In these settings, deep reinforcement learning can help reduce labor expenses, product faults, and unexpected downtime, and can improve production speed and transition times.

Conclusion

To sum up, reinforcement learning is a third category of machine learning algorithms that cannot be classified as a supervised or unsupervised algorithm. It is entirely based on learning from previous experiences with the aim to maximize the reward.

As discussed, there are two major categories of reinforcement learning algorithms: the model-based category, which uses a model of the environment to predict outcomes and plan actions that maximize the reward, and the model-free category, which learns a policy directly from the outcomes of repeated trial and error, without such a model.

Author

Turing Staff