Microservices

Basic Interview Q&A

1. What are microservices? How do they differ from monolithic architecture?

Microservices are a software architectural style in which an application is divided into small, loosely coupled services that can be developed, deployed, and maintained independently. Each service in a microservices architecture focuses on a specific business capability and communicates with others through well-defined APIs.

In contrast, monolithic architecture involves building an application as a single, interconnected unit. All the components are tightly integrated, making it challenging to scale and modify individual parts independently.

2. What are the main advantages of using microservices?

The main advantages of using microservices are:

Scalability: Microservices allow individual components to be scaled independently based on demand, which optimizes resource utilization.

Flexibility: Developers can use different programming languages, databases, and technologies for each microservice, enabling the use of the best tool for each task.

Continuous delivery: Microservices promote faster development and deployment cycles, enabling continuous integration and deployment (CI/CD) practices.

Fault isolation: A failure in one microservice does not necessarily bring down the entire application, which improves overall system resilience.

Team autonomy: Microservices enable multiple teams to work independently on different services, which enhances development speed and promotes innovation.

3. What are the main components of Microservices?

A microservices architecture typically consists of:

- Containers, Clustering, and Orchestration

- Infrastructure as Code (IaC)

- Cloud Infrastructure

- API Gateway

- Enterprise Service Bus

- Service Delivery

4. Explain the characteristics of a well-designed microservices architecture.

A well-designed microservices architecture typically exhibits the following characteristics:

Single responsibility principle: Each microservice focuses on a specific business capability, keeping it small and well-defined.

Loose coupling: Microservices communicate through well-defined APIs, reducing dependencies between components.

Independent deployment: Each microservice can be deployed independently, enabling faster updates and reducing the risk of system-wide failures.

Resilience: The architecture includes mechanisms to handle failures gracefully and recover from errors without impacting the entire system.

Scalability: Microservices allow horizontal scaling of individual components, ensuring efficient resource utilization.

Polyglot persistence: Different microservices can use their own databases, choosing the best-suited data storage for their needs.

Monitoring and observability: The architecture includes robust monitoring and logging capabilities to facilitate debugging and performance optimization.

5. How does microservices architecture promote continuous integration and continuous deployment (CI/CD)?

Microservices architecture promotes CI/CD by facilitating the independent development and deployment of each service. Since microservices are loosely coupled, teams can work on them independently. This makes it easier to add new features, fix bugs, and perform updates without affecting the entire system.

CI/CD pipelines can be set up for individual microservices, allowing automated testing, integration, and deployment. With smaller codebases and well-defined boundaries between services, it becomes faster and safer to deliver changes to production. This approach also supports frequent releases and enables rapid feedback loops for developers, reducing the time to market new features and improvements.

6. What are the key challenges in migrating from a monolithic architecture to microservices?

Migrating from a monolithic architecture to microservices can be challenging due to the following key factors:

Decomposition complexity: Identifying the right service boundaries and breaking down a monolith into cohesive microservices requires careful analysis and planning.

Data management: Handling data in a distributed environment becomes more complex as transactions may span multiple microservices.

Inter-service communication: Ensuring efficient and reliable communication between microservices is crucial to avoid performance bottlenecks and failure cascades.

Operational overhead: Managing multiple services, monitoring, and logging can increase operational complexity, which requires robust DevOps practices.

Testing: Testing strategies need to evolve to handle integration testing, contract testing, and end-to-end testing across multiple services.

Consistency: Ensuring consistency across microservices, especially during data updates, is challenging.

7. While using Microservices, mention some of the challenges you have faced.

*To answer a microservices interview question like this, list the blockers you have personally faced while using the technology and explain how you overcame them.*

Some of the common challenges that developers face while using Microservices are:

- Microservices are interdependent, so they must constantly communicate with one another over the network.

- As a distributed system, the model is inherently complex.

- If you adopt a microservices architecture, be prepared for additional operational overhead.

- Managing heterogeneous, widely distributed microservices requires skilled people.

At the end of your answer to this question, make sure to discuss how you tackled these roadblocks.

8. Describe the role of Docker in microservices deployment.

Docker plays a vital role in microservices deployment by providing containerization. Each microservice and its dependencies are packaged into lightweight, isolated containers. Docker ensures that each container runs consistently across different environments such as development, testing, and production. This avoids the notorious "it works on my machine" issue.

Containers simplify the deployment process as they encapsulate all the necessary dependencies, libraries, and configurations needed to run a microservice. This portability ensures seamless and consistent deployment across various infrastructure setups, making scaling and maintenance more manageable.

9. What is the purpose of an API gateway in microservices?

An API gateway in microservices acts as a central entry point that handles client requests and then routes them to the appropriate microservices. It serves several purposes:

Aggregation: The API gateway can combine multiple backend microservices' responses into a single cohesive response to fulfill a client request. This reduces round-trips.

Load balancing: The gateway can distribute incoming requests across multiple instances of the same microservice to ensure optimal resource utilization and high availability.

Authentication and authorization: It can handle security-related concerns by authenticating clients and authorizing access to specific microservices.

Caching: The API gateway can cache responses from microservices to improve performance and reduce redundant requests.

Protocol translation: It can translate client requests from one protocol (e.g., HTTP/REST) to the appropriate protocol used by the underlying microservices.
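To make the routing and load-balancing roles concrete, here is a minimal in-process sketch in Java. It is an illustration under stated assumptions, not how production gateways are built: the class name `ApiGateway`, its methods, and the choice to model each backend instance as a `Function<String, String>` (instead of an HTTP endpoint) are all hypothetical. A request goes to the service with the longest matching path prefix, and that service's instances are picked round-robin.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical in-process gateway: each backend "instance" is modeled as a
// function from request path to response body instead of a real HTTP endpoint.
// Registration is not thread-safe; this is a sketch only.
class ApiGateway {
    private final Map<String, List<Function<String, String>>> routes = new LinkedHashMap<>();
    private final Map<String, AtomicInteger> counters = new LinkedHashMap<>();

    // Register one instance of the service that handles paths starting with prefix.
    void register(String prefix, Function<String, String> instance) {
        routes.computeIfAbsent(prefix, p -> new ArrayList<>()).add(instance);
        counters.putIfAbsent(prefix, new AtomicInteger());
    }

    // Route to the longest matching prefix, then pick an instance round-robin.
    String handle(String path) {
        String best = null;
        for (String prefix : routes.keySet()) {
            if (path.startsWith(prefix) && (best == null || prefix.length() > best.length())) {
                best = prefix;
            }
        }
        if (best == null) {
            return "404 Not Found"; // no service owns this path
        }
        List<Function<String, String>> instances = routes.get(best);
        int index = Math.floorMod(counters.get(best).getAndIncrement(), instances.size());
        return instances.get(index).apply(path);
    }
}
```

In a fuller gateway, concerns such as authentication or caching would typically run inside `handle` before the request is forwarded.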

10. List the main features of Microservices.

Some of the main features of Microservices include:

- Decoupling: Services within a system are largely independent of one another. As a result, the application as a whole can be easily built, modified, and scaled.

- Componentization: Microservices are treated as discrete components that can be easily swapped out or upgraded.

- Business Capabilities: Microservices are small, and each focuses on a single business capability.

- Team autonomy: Each team works independently on its own services, resulting in shorter project timelines.

- Continuous Delivery: Enables frequent software releases by automating the building, testing, and approval of software.

- Responsibility: Microservices teams treat applications as products they are responsible for, rather than as projects that are handed off once complete.

- Decentralized Governance: The objective is to select the appropriate tool for the job. Developers can choose the best tools to tackle their problems.

- Agility: Microservices allow for more agile development. New features can be added quickly and removed just as easily.

11. How do microservices ensure fault tolerance and resilience in distributed systems?

Microservices promote fault tolerance and resilience through several techniques:

Redundancy: By replicating microservices across multiple instances and possibly different data centers, the system can continue functioning even if some instances fail.

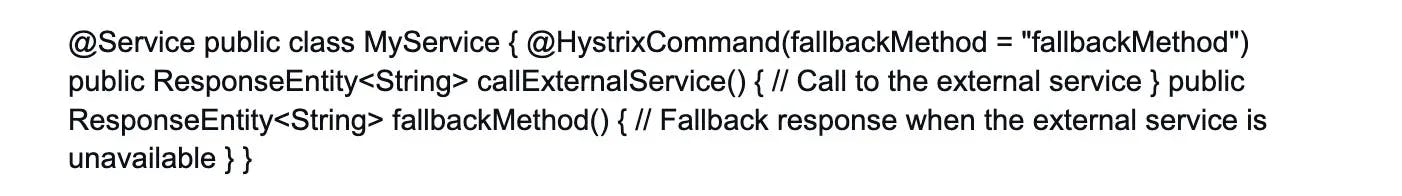

Circuit breaker pattern: Microservices implement circuit breakers to prevent cascading failures. If a microservice experiences issues, the circuit breaker stops further requests, providing a fallback response or error message.
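The state machine behind a circuit breaker is small enough to sketch. The Java class below is a hypothetical illustration (the class name, the `failureThreshold` and `openMillis` parameters, and the fallback style are assumptions), not the API of any particular resilience library. After `failureThreshold` consecutive failures it opens and returns the fallback without calling the service; once `openMillis` has passed, it lets one trial call through and closes again if that call succeeds.

```java
import java.util.function.Supplier;

// Minimal circuit breaker sketch (single-threaded, not production-ready).
// CLOSED: calls pass through. OPEN: calls fail fast with the fallback.
// HALF_OPEN: after the cool-down, one trial call decides whether to close again.
class CircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures before opening
    private final long openMillis;      // cool-down before a trial call is allowed
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0L;

    CircuitBreaker(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    <T> T call(Supplier<T> action, Supplier<T> fallback) {
        if (state == State.OPEN) {
            if (System.currentTimeMillis() - openedAt < openMillis) {
                return fallback.get(); // fail fast: do not touch the struggling service
            }
            state = State.HALF_OPEN; // cool-down elapsed: allow one trial call
        }
        try {
            T result = action.get();
            consecutiveFailures = 0;
            state = State.CLOSED; // success closes the circuit again
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN; // trip (or re-trip) the breaker
                openedAt = System.currentTimeMillis();
            }
            return fallback.get();
        }
    }

    State state() {
        return state;
    }
}
```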

Bulkheads: Resources such as thread pools and connection pools are partitioned per service or dependency, so a failure or slowdown in one part cannot exhaust the resources that others need. This contains potential damage.
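One common way to implement a bulkhead is to cap how many concurrent calls may go to a given dependency. The sketch below assumes one semaphore per dependency; the `Bulkhead` class and its methods are hypothetical. When all permits are in use, extra calls are rejected immediately instead of piling up and consuming the caller's threads.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Semaphore-based bulkhead sketch: caps concurrent calls to one dependency
// so a slow dependency cannot tie up every thread in the calling service.
class Bulkhead {
    private final Semaphore permits;

    Bulkhead(int maxConcurrentCalls) {
        this.permits = new Semaphore(maxConcurrentCalls);
    }

    <T> T call(Supplier<T> action, Supplier<T> rejected) {
        if (!permits.tryAcquire()) {
            return rejected.get(); // compartment full: reject now rather than queueing
        }
        try {
            return action.get();
        } finally {
            permits.release(); // always return the permit, even if the call throws
        }
    }

    int availablePermits() {
        return permits.availablePermits();
    }
}
```

Giving each downstream dependency its own `Bulkhead` instance is what keeps one misbehaving dependency from starving calls to the others.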

Graceful degradation: In the face of service degradation or unavailability, microservices can gracefully degrade their functionality or provide limited but essential features.

Timeouts: Setting appropriate timeouts for communication between microservices ensures that resources are not tied up waiting indefinitely.
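As a sketch of a per-call timeout, assuming Java 9 or later for `CompletableFuture.orTimeout`: the hypothetical helper below runs a (simulated) remote call asynchronously and returns a fallback value if no result arrives within the limit. Note that `orTimeout` only stops the caller from waiting; it does not cancel the underlying work.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Sketch: bound how long a caller waits for a (simulated) downstream call.
class TimeoutClient {
    static <T> T callWithTimeout(Supplier<T> remoteCall, long timeoutMillis, T fallback) {
        return CompletableFuture.supplyAsync(remoteCall)            // run on the common pool
                .orTimeout(timeoutMillis, TimeUnit.MILLISECONDS)   // fail the future if too slow
                .exceptionally(ex -> fallback)                     // timeout or error: use fallback
                .join();
    }
}
```

In a real client, the same effect is usually achieved with the HTTP library's own connect and read timeouts; the point is that every remote call gets an upper bound.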

12. What do you understand about Cohesion and Coupling?

Coupling

Coupling describes the relationship between software modules A and B: how much one module depends on the other. Coupling is commonly grouped into three levels: tightly coupled (highly dependent) modules, loosely coupled modules, and uncoupled modules. Loose coupling, typically achieved through interfaces, is generally the preferred form.
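In Java, loose coupling through an interface might look like the following sketch. All names (`PaymentGateway`, `FakePaymentGateway`, `OrderService`) are illustrative. `OrderService` depends only on the interface, so the payment implementation can be replaced, for example with a test double, without changing `OrderService`.

```java
// Abstraction that OrderService depends on (hypothetical name).
interface PaymentGateway {
    boolean charge(String customerId, long amountCents);
}

// One interchangeable implementation; a real one would call a payment provider.
class FakePaymentGateway implements PaymentGateway {
    @Override
    public boolean charge(String customerId, long amountCents) {
        return amountCents > 0; // stand-in rule for a successful charge
    }
}

class OrderService {
    private final PaymentGateway payments; // injected, so any implementation works

    OrderService(PaymentGateway payments) {
        this.payments = payments;
    }

    String placeOrder(String customerId, long amountCents) {
        return payments.charge(customerId, amountCents) ? "CONFIRMED" : "REJECTED";
    }
}
```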

Cohesion

Cohesion describes how closely the elements of a single module are related and work toward the same function. In general, a highly cohesive module can carry out its function effectively without depending heavily on other modules, and high cohesion makes a module easier to understand, maintain, and reuse.

13. Why are reports and dashboards important in Microservices?

Reports and dashboards are commonly used to monitor a system. Microservices reports and dashboards can assist you in the following ways:

- Determine which resources are supported by which Microservices.

- Determine which services are impacted when components are changed or replaced.

- Make documentation accessible at all times.

- Examine the component versions that have been deployed.

- Determine the components' maturity and compliance levels.

14. What are the essential components of microservices communication?

The essential components of microservices communication include:

APIs (application programming interfaces): Microservices communicate with each other through well-defined APIs, enabling loose coupling and interoperability.

Message brokers: In asynchronous communication, message brokers (e.g., RabbitMQ, Apache Kafka) facilitate passing messages between microservices.

REST (representational state transfer): RESTful APIs are widely used for synchronous communication, allowing services to exchange data over standard HTTP methods.

Service discovery: Microservices need a mechanism to discover each other dynamically in a changing environment. Tools like Consul or Eureka assist with service registration and discovery.

Event streaming: For real-time data processing and event-driven architectures, tools like Kafka or Apache Pulsar are used to stream events between microservices.

15. Discuss the relationship between Microservices and DevOps.

Microservices and DevOps are closely related and often go hand in hand.

Faster deployment: Microservices' smaller codebases and well-defined boundaries enable rapid development and deployment. These align well with DevOps’ principles of continuous integration and continuous deployment (CI/CD).

Automation: Microservices and DevOps rely heavily on automation. Microservices encourage automation for testing, deployment, and scaling, while DevOps emphasizes automating the entire software delivery process.

Collaboration: The microservices approach breaks down monolithic barriers, enabling smaller, cross-functional teams that work collaboratively. DevOps also emphasizes collaboration between development, operations, and other stakeholders.

Resilience and monitoring: DevOps principles of monitoring and observability align with the need for resilient microservices where continuous monitoring helps identify and address issues promptly.

16. How do you decide the appropriate size of a microservice, and what factors influence this decision?

Deciding the appropriate size of a microservice is crucial for a well-designed architecture. Factors that influence this decision include:

Single responsibility principle: A microservice should focus on a single business capability, keeping it small and manageable.

Domain boundaries: Defining microservices based on clear domain boundaries ensures better separation of concerns.

Scalability: Consider the expected load on the service. If a component needs frequent scaling, it might be a candidate for a separate microservice.

Data management: If different parts of the system require separate data storage technologies or databases, it might be an indicator to split them into separate microservices.

Development team autonomy: Smaller teams can work more efficiently, so splitting services to align with team structures can be beneficial.

Deployment frequency: If different parts of the system require separate deployment frequencies, it could be a sign that they should be separate microservices.

17. Explain the principles of Conway's Law and its relevance in microservices architecture.

Conway's Law states that the structure of a software system will mirror the communication structures of the organization that builds it. In the context of microservices architecture, this means that the architecture will reflect the communication and collaboration patterns of the development teams.

In practice, this implies that if an organization has separate teams with different areas of expertise (e.g., front-end, back-end), the architecture is likely to have distinct microservices that align with these specialized teams. On the other hand, if teams are organized around specific business capabilities, the architecture will consist of microservices that focus on those capabilities.

Understanding Conway's Law is crucial for effective microservices design as it emphasizes the importance of communication and collaboration within the organization to ensure a well-structured and coherent microservices architecture.

18. What is the role of service registration and discovery in a containerized microservices environment?

In a containerized microservices environment, service registration and discovery play a vital role in enabling dynamic communication between microservices. Here's how they work:

Service registration: When a microservice starts up, it registers itself with a service registry (e.g., Consul, Eureka) by providing essential information like its network location, API endpoints, and health status.

Service discovery: When a microservice needs to communicate with another microservice, it queries the service registry to discover the network location and endpoint details of the target service.

This dynamic discovery allows microservices to locate and interact with each other without hardcoding their locations or relying on static configurations. As new instances of services are deployed or removed, the service registry is updated accordingly. This ensures seamless communication within the containerized environment.
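The register/discover cycle can be sketched with an in-memory registry. This is a hypothetical stand-in for illustration only: it is not the API of Consul or Eureka, and it omits health checks and lease expiry. Instances call `register` on startup and `deregister` on shutdown, and clients call `discover` instead of hardcoding addresses.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

// In-memory stand-in for a service registry (illustration only).
class ServiceRegistry {
    private final Map<String, List<String>> instances = new ConcurrentHashMap<>();

    // Called by a service instance when it starts up.
    void register(String serviceName, String address) {
        instances.computeIfAbsent(serviceName, k -> new CopyOnWriteArrayList<>()).add(address);
    }

    // Called on shutdown, or by the registry when a health check fails.
    void deregister(String serviceName, String address) {
        List<String> list = instances.get(serviceName);
        if (list != null) {
            list.remove(address);
        }
    }

    // Called by a client before each request; returns an immutable snapshot.
    List<String> discover(String serviceName) {
        return List.copyOf(instances.getOrDefault(serviceName, List.of()));
    }
}
```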

19. Discuss the importance of automated testing in microservices development.

Automated testing is of paramount importance in microservices development due to several reasons:

Rapid feedback: Microservices often have frequent releases. Automated tests enable quick feedback on the changes made, allowing developers to catch and fix issues early in the development process.

Regression testing: With each service developed independently, changes in one service may affect others. Automated testing ensures that changes in one service do not introduce regressions in the overall system.

Integration testing: Microservices rely heavily on inter-service communication. Automated integration tests verify that services interact correctly and data flows seamlessly between them.

Scalability testing: Automated tests can simulate heavy loads and traffic to evaluate how well the architecture scales under stress.

Isolation: Automated tests can use mocks or stubs to isolate a service from external dependencies, databases, and other services, ensuring reliable and repeatable test results.

20. How is WebMvcTest annotation used in Spring MVC applications?

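When a test should focus only on Spring MVC components, the @WebMvcTest annotation is used for unit testing in Spring MVC applications.

In the following code:

@WebMvcTest(value = ToTestController.class, secure = false)

only ToTestController is loaded. No other controllers or mappings are deployed while this unit test runs, and @Service or @Repository beans are not created, so they are usually supplied as mocks. The secure = false attribute disables security auto-configuration for the test; it was deprecated in Spring Boot 2.1 and is not available in later versions.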

21. Do you think GraphQL is the perfect fit for designing a Microservice architecture?

GraphQL hides the fact that you have a microservice architecture from clients, which makes it a good match for microservices. On the backend you want to break everything down into microservices, but on the frontend you want all of your data to come from a single API. GraphQL is an effective way to achieve both: it lets you split the backend into microservices while still offering a single API to all of the apps and allowing data from multiple services to be joined together.

22. How can you handle database management efficiently in microservices?

Efficient database management in microservices can be achieved through these strategies:

Database per service: Each microservice should have its own database to ensure loose coupling between services and avoid complex shared databases.

Eventual consistency: In distributed systems, ensuring immediate consistency across all services can be challenging. Embrace the concept of eventual consistency to allow data to propagate and synchronize over time.

Sagas: Implementing sagas (a sequence of local transactions) can maintain data consistency across multiple services, even in the face of failures.
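A minimal orchestration-style saga can be sketched in Java as below. It is a hypothetical illustration: the `Saga` class and its methods are assumptions, and a real saga would also persist its progress and make steps and compensations idempotent so it can recover after a crash. Each step pairs a local transaction with a compensating action; if a step fails, the completed steps are compensated in reverse order.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Orchestration-style saga sketch: each step is a local transaction paired
// with a compensating action that semantically undoes it.
class Saga {
    private static final class Step {
        final Runnable action;
        final Runnable compensation;

        Step(Runnable action, Runnable compensation) {
            this.action = action;
            this.compensation = compensation;
        }
    }

    private final List<Step> steps = new ArrayList<>();

    Saga step(Runnable action, Runnable compensation) {
        steps.add(new Step(action, compensation));
        return this;
    }

    // Returns true if every step succeeded; otherwise compensates the
    // already-completed steps in reverse order and returns false.
    boolean execute() {
        Deque<Step> completed = new ArrayDeque<>();
        for (Step step : steps) {
            try {
                step.action.run();
                completed.push(step); // most recent step ends up on top
            } catch (RuntimeException e) {
                while (!completed.isEmpty()) {
                    completed.pop().compensation.run();
                }
                return false;
            }
        }
        return true;
    }
}
```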

CQRS (Command Query Responsibility Segregation): CQRS separates read and write operations, allowing the use of specialized databases for each. This optimizes read and write performance and simplifies data models.

Event sourcing: In event-driven architectures, event sourcing stores all changes to the data as a sequence of events to allow easy rebuilding of state and auditing.
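A minimal event-sourcing sketch, using a bank account as an assumed example domain (all class names are hypothetical): the account never stores its balance directly; it appends events and derives the balance by replaying them, and replaying only a prefix of the log reconstructs an earlier state for auditing. A production system would persist the log in an event store and typically add snapshots.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Illustrative event types for a bank account.
enum AccountEventType { DEPOSITED, WITHDRAWN }

// An immutable fact that is appended once and never updated.
final class AccountEvent {
    final AccountEventType type;
    final long amountCents;

    AccountEvent(AccountEventType type, long amountCents) {
        this.type = type;
        this.amountCents = amountCents;
    }
}

// Stores only the append-only event log and derives state by replaying it.
class EventSourcedAccount {
    private final List<AccountEvent> log = new ArrayList<>();

    void deposit(long amountCents) {
        log.add(new AccountEvent(AccountEventType.DEPOSITED, amountCents));
    }

    void withdraw(long amountCents) {
        if (amountCents > balance()) {
            throw new IllegalStateException("insufficient funds"); // rejected commands emit no event
        }
        log.add(new AccountEvent(AccountEventType.WITHDRAWN, amountCents));
    }

    long balance() {
        return balanceAfter(log.size());
    }

    // Replays the first eventCount events, reconstructing any past state.
    long balanceAfter(int eventCount) {
        long balance = 0;
        for (AccountEvent e : log.subList(0, eventCount)) {
            balance += e.type == AccountEventType.DEPOSITED ? e.amountCents : -e.amountCents;
        }
        return balance;
    }

    List<AccountEvent> events() {
        return Collections.unmodifiableList(log);
    }
}
```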

23. Explain the benefits and challenges of using Kubernetes for microservices orchestration.

Benefits of using Kubernetes for microservices orchestration:

Container orchestration: Kubernetes simplifies the deployment and management of containers. It handles scaling, load balancing, and self-healing.

High availability: Kubernetes supports multiple replicas of services, ensuring high availability and fault tolerance.

Auto-scaling: Kubernetes can automatically scale services based on CPU utilization or custom metrics to optimize resource usage.

Service discovery: Kubernetes provides built-in service discovery and DNS resolution for communication between services.

Challenges of using Kubernetes for microservices orchestration:

Learning curve: Kubernetes has a steep learning curve and managing it requires a good understanding of its concepts and components.

Infrastructure complexity: Setting up and managing a Kubernetes cluster can be complex and resource-intensive.

Networking: Configuring networking for microservices in Kubernetes can be challenging, especially when spanning multiple clusters or environments.

Resource overhead: Kubernetes itself adds resource overhead, which might be significant for smaller applications.

24. What are the best practices for securing communication between microservices?

To secure communication between microservices, consider the following best practices:

Transport Layer Security (TLS): Enforce TLS encryption for communication over the network to ensure data confidentiality and integrity.

Authentication and authorization: Implement strong authentication mechanisms to verify the identity of microservices. Use access control and role-based authorization to restrict access to sensitive APIs.

Use API gateways: Channel all external communication through an API gateway. This centralizes security policies and adds an extra layer of protection.

Secure service-to-service communication: When microservices communicate with each other internally, use Mutual Transport Layer Security (mTLS) to authenticate both ends of the connection.

Service mesh: Consider using a service mesh like Istio or Linkerd which provides advanced security features like secure service communication, access control, and traffic policies.

API security: Use API keys, OAuth tokens, or JWT (JSON Web Tokens) to secure APIs and prevent unauthorized access.

25. Explain Materialized View pattern.

When we need to design queries that retrieve data from several microservices, we can use the Materialized View pattern to aggregate that data. In this approach, we create a read-only table in advance that contains data owned by multiple microservices (that is, we prepare denormalized data before the actual queries run). The table is formatted to meet the needs of the client app or API gateway.

One of the most important points to remember is that a materialized view and the data it includes are disposable since they may be recreated entirely from the underlying data sources.
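The pattern can be sketched as an in-memory view fed by events. Everything here is an assumption for illustration: the two source services (a Customer service and an Order service), the event-handler names, and the row format. The view keeps its own denormalized copy, so a query is a single lookup with no cross-service calls; because every row is derived from source data, the whole view could be discarded and rebuilt by replaying the source events.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Materialized view sketch fed by events from two hypothetical services.
// The Customer service owns names; the Order service owns orders.
class OrderSummaryView {
    private static final class OrderRef {
        final String customerId;
        final long totalCents;

        OrderRef(String customerId, long totalCents) {
            this.customerId = customerId;
            this.totalCents = totalCents;
        }
    }

    private final Map<String, String> customerNames = new ConcurrentHashMap<>(); // copied from Customer events
    private final Map<String, OrderRef> orders = new ConcurrentHashMap<>();      // copied from Order events
    private final Map<String, String> rows = new ConcurrentHashMap<>();          // the precomputed view

    // Handler for a hypothetical CustomerUpdated event.
    void onCustomerUpdated(String customerId, String name) {
        customerNames.put(customerId, name);
        orders.forEach((orderId, order) -> {
            if (order.customerId.equals(customerId)) {
                refresh(orderId); // re-denormalize rows that embed this customer
            }
        });
    }

    // Handler for a hypothetical OrderPlaced event.
    void onOrderPlaced(String orderId, String customerId, long totalCents) {
        orders.put(orderId, new OrderRef(customerId, totalCents));
        refresh(orderId);
    }

    // Read side: a single lookup with no joins at query time; null if unknown.
    String query(String orderId) {
        return rows.get(orderId);
    }

    private void refresh(String orderId) {
        OrderRef order = orders.get(orderId);
        String name = customerNames.getOrDefault(order.customerId, "unknown customer");
        rows.put(orderId, name + " | " + order.totalCents);
    }
}
```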

26. How does microservices architecture facilitate rolling updates and backward compatibility?

Microservices architecture facilitates rolling updates and backward compatibility through the following mechanisms:

Service isolation: Microservices are isolated from each other, allowing individual services to be updated without affecting others.

API versioning: When introducing changes to APIs, versioning enables backward compatibility by allowing both old and new versions of APIs to coexist until all consumers can transition to the new version.

Semantic versioning: Following semantic versioning guidelines (major.minor.patch) ensures predictability in how versions are updated and signals breaking changes and backward-compatible updates.
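As a sketch of how those signals can be checked mechanically, the hypothetical `SemVer` class below decides whether a provider version can serve a consumer that was built against another version. It assumes plain `MAJOR.MINOR.PATCH` strings and deliberately ignores pre-release tags and the special rules for `0.y.z` versions.

```java
// Semantic-versioning compatibility sketch: a provider can serve a consumer
// if both share the same MAJOR version and the provider is not older.
final class SemVer implements Comparable<SemVer> {
    final int major;
    final int minor;
    final int patch;

    SemVer(int major, int minor, int patch) {
        this.major = major;
        this.minor = minor;
        this.patch = patch;
    }

    static SemVer parse(String text) {
        String[] parts = text.split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected MAJOR.MINOR.PATCH but got: " + text);
        }
        return new SemVer(Integer.parseInt(parts[0]), Integer.parseInt(parts[1]), Integer.parseInt(parts[2]));
    }

    @Override
    public int compareTo(SemVer other) {
        if (major != other.major) return Integer.compare(major, other.major);
        if (minor != other.minor) return Integer.compare(minor, other.minor);
        return Integer.compare(patch, other.patch);
    }

    // A MAJOR bump signals a breaking change; MINOR and PATCH bumps stay backward compatible.
    boolean canServe(SemVer consumerVersion) {
        return major == consumerVersion.major && compareTo(consumerVersion) >= 0;
    }
}
```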

Feature flags: Feature flags or toggles allow the gradual release of new features, giving teams control over when to enable or disable functionalities.
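A feature flag can be as simple as a lookup consulted at runtime. The sketch below is hypothetical (an in-memory `FeatureFlags` class rather than any particular flag service) and supports gradual percentage rollouts, so a team can expose a new feature to a fraction of users and turn it off again without redeploying.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// In-memory feature-flag sketch with percentage rollout. Each user is hashed
// into a stable bucket from 0 to 99, so the same user keeps getting the same
// answer while the rollout percentage is unchanged.
class FeatureFlags {
    private final Map<String, Integer> rolloutPercent = new ConcurrentHashMap<>();

    // 0 disables the flag for everyone; 100 enables it for everyone.
    void setRollout(String flag, int percent) {
        if (percent < 0 || percent > 100) {
            throw new IllegalArgumentException("percent must be between 0 and 100");
        }
        rolloutPercent.put(flag, percent);
    }

    boolean isEnabled(String flag, String userId) {
        int percent = rolloutPercent.getOrDefault(flag, 0); // unknown flags are off
        int bucket = Math.floorMod((flag + ":" + userId).hashCode(), 100);
        return bucket < percent;
    }
}
```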

Graceful degradation: In case of service unavailability, services can degrade gracefully and provide a limited but functional response to maintain overall system stability.

27. Are containers similar to a virtual machine? Provide valid points to justify your answer.

No, containers are very different from virtual machines. Here are the reasons why:

- Containers, unlike virtual machines, do not need to boot an operating system kernel, so they can typically start in under a second. This characteristic distinguishes container-based virtualization from other virtualization methods.

- Container-based virtualization provides near-native performance since it adds little overhead to the host computer.

- Unlike hypervisor-based virtualization, container-based virtualization does not require a hypervisor or a separate guest operating system for each instance.

- All containers on a host computer share the host machine's kernel, including its scheduler, which reduces the need for additional resources.

- Container images are small compared to virtual machine images, making them easy to distribute.

- Control groups (cgroups) are used to control resource allocation for containers, so a container cannot use more resources than it has been allotted.

28. What is the role of a message broker in asynchronous microservices communication?

A message broker plays a crucial role in enabling asynchronous communication between microservices. It acts as an intermediary that facilitates the exchange of messages between microservices without requiring them to interact directly in real time.

Here's how it works:

- When a microservice wants to communicate with another microservice, it sends a message to the message broker.

- The message broker stores the message temporarily and ensures its delivery to the destination microservice.

- The receiving microservice processes the message whenever it's ready and acknowledges its consumption back to the message broker.

- The message broker can also handle message queuing, message filtering, and routing based on specific criteria.

Using a message broker decouples microservices. It allows them to work independently and asynchronously, improving system responsiveness and fault tolerance.
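The publish-then-consume flow can be sketched with Java's `BlockingQueue`. This is a hypothetical in-memory stand-in, not the client API of RabbitMQ or Kafka, and it omits the persistence, acknowledgements, and routing rules that real brokers provide. The producer publishes and moves on; the consumer retrieves messages later at its own pace.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// In-memory stand-in for a message broker (illustration only).
class InMemoryBroker {
    private final Map<String, BlockingQueue<String>> queues = new ConcurrentHashMap<>();

    private BlockingQueue<String> queue(String name) {
        return queues.computeIfAbsent(name, k -> new LinkedBlockingQueue<>());
    }

    // Producer side: enqueue and return without waiting for any consumer.
    void publish(String queueName, String message) {
        queue(queueName).offer(message);
    }

    // Consumer side: wait up to timeoutMillis for the next message; null if none arrived.
    String receive(String queueName, long timeoutMillis) {
        try {
            return queue(queueName).poll(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt status
            return null;
        }
    }
}
```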

29. Describe the concept of API-first design and its impact on microservices development.

API-first design is an approach where the design of APIs (application programming interfaces) drives the entire software development process. It emphasizes defining the API contract and specifications before implementing the underlying logic.

In the context of microservices development, API-first design has several impacts:

Clear communication: Clearly defined API contracts enable effective communication between microservices teams and consumers. It prevents misunderstandings and ensures consistent expectations.

Parallel development: The API contract can be shared with consumers early in the development process, allowing parallel development of front-end and back-end services.

Contract testing: API-first design facilitates contract testing, where consumers and providers test against the agreed-upon API specifications. This catches incompatibilities early, before the services are integrated.

Evolutionary design: APIs can evolve independently of the underlying implementation, allowing seamless updates and improvements without breaking existing consumers.

Reusability: Well-designed APIs can be reused across multiple services, promoting consistency and reducing duplication of effort.

Wrapping up

Microservices architecture is changing how contemporary applications are built. Understanding the fundamental concepts and challenges of microservices is essential for success, for both candidates and hiring managers.
